(LSJ) Denario is Silicon based Researcher

/https://huggingface.co/spaces/astropilot-ai/Denario

(LSJ) Lifetime Scope Journal. The Business Magazine from Lifetime Studios since 2006.

https://huggingface.co/spaces/astropilot-ai/Denario

Liiketoiminnan tehostaminen tekoälyllä

jatkuu..

Tekoäly liiketoiminnan kehittämisessä

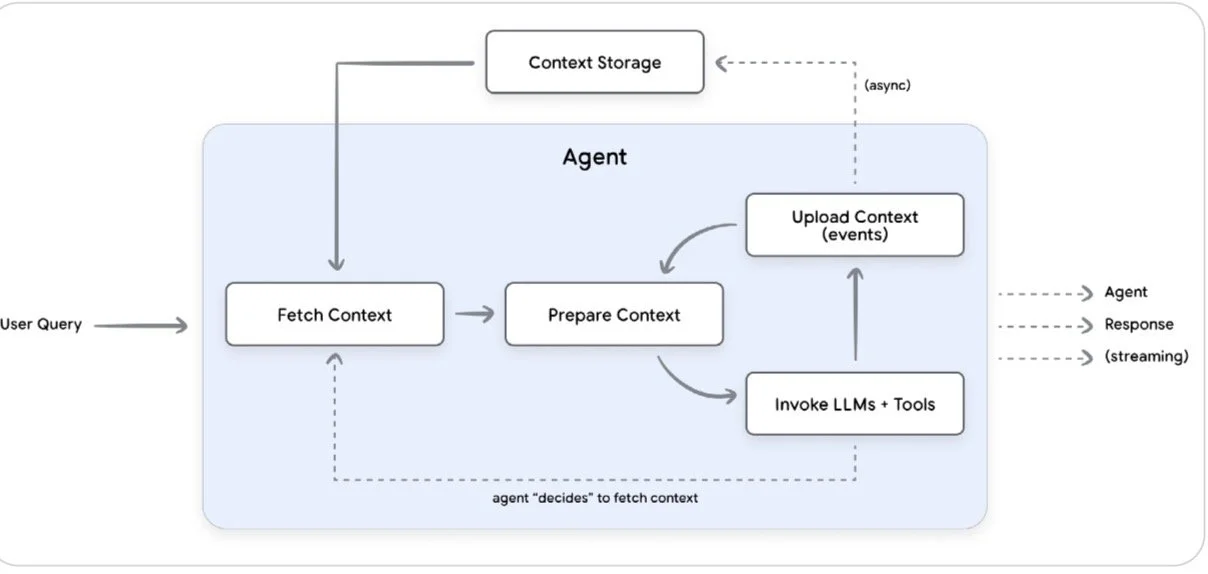

The document presents a four-layered framework for building robust and scalable AI agent systems, moving from abstract reasoning to real-world adaptation:

Layer 1: § The LLM (Reasoning):The "Brain" providing core cognitive power, but isolated. (e.g., Gemini)

Layer 2: § The Agent (Execution): The "Hands" connecting the LLM to the world using tools and grounding (RAG) for action. (e.g., Vertex AI Agent Builder)

Layer 3: § The Orchestrator (Coordination): The "Manager" that manages workflow, breaks complex tasks into sub-tasks, and coordinates a team of specialized agents. (e.g., LangGraph)

Layer 4: § The Learning Loop (Adaptation) : The key to "AI Engineering 2.0," providing system-level adaptation through Persistent Memory and Feedback Loops (self-correction or human-in-the-loop). (e.g., Memory Bank)

The framework is shown through business cases (e.g., insurance claims, supply chain) and suggests new required roles for AI engineering teams.

The following post presents an expanded academic framework inspired by a sharp, layered model for AI agents originally shared by Connor Davis.

From Thinkers to Doers: A Layered Framework for AI Agents

The term "AI Agent" has become central to discussions about artificial intelligence, but its meaning can be ambiguous. To build robust and scalable AI applications, it's essential to move beyond the hype and adopt a structured framework.

A modern AI agent is not a single tool. It is a system comprised of distinct abstraction layers, each with a specific function. We can conceptualize this stack as four interconnected layers: the LLM (Reasoning), the Agent (Execution), the Orchestrator (Coordination), and the Learning Loop (Adaptation).

Layer 1: The "Brain" (The Reasoning Layer) 🧠

At the foundation of any agent is the Large Language Model (LLM), such as Google's Gemini. This is the core "brain" or reasoning engine.

Function: It provides the raw cognitive power. It can read, write, reason, and synthesize information based on the vast "world knowledge" it acquired during training (Vaswani et al. 2017).

Limitation: In its raw form, an LLM is isolated. It is non-stateful (it has no memory of past interactions) and cannot interact with the external world. It's like a brilliant mind in a locked room—all potential, no action.

Layer 2: The "Hands" (The Execution Layer) 👐

This layer gives the "brain" a "body," connecting its abstract reasoning to the real world. This is where the LLM becomes a functional agent.

Function: This layer provides the LLM with tools and grounding.

Tool Use: Through function calling, the agent can interact with external APIs. This allows it to check a database, send an email, or access real-time data.

Grounding: Using Retrieval-Augmented Generation (RAG), the agent can access specific, external knowledge (like your company's documents) to ground its answers in factual, private data (Lewis et al. 2020).

Illustrative Technology: Vertex AI Agent Builder is a prime example of this layer. It provides the platform to connect a Gemini model to data sources (via Vertex AI Search) and external tools, turning a "thinker" into a "doer."

Layer 3: The "Manager" (The Coordination Layer) 🧑💼

While a single agent is useful, complex problems require a team. The orchestrator acts as the "manager," coordinating multiple specialized agents.

Function: This layer manages workflow and decomposition. Instead of one "god" agent trying to do everything, the orchestrator breaks a complex task (e.g., "analyze our quarterly sales") into sub-tasks and routes them to a team of specialized agents:

Analyst Agent: Queries a BigQuery database.

Coder Agent: Writes Python to visualize the data.

QA Agent: Validates the code and the results.

Illustrative Technology: Open-source frameworks like LangGraph (which integrates with Vertex AI Agent Engine) are built for this. They create stateful graphs that manage the flow of information between agents, enabling complex, multi-step reasoning and action (Yao et al. 2022).

AI Engineering 2.0: The Learning Layer 🔄

The first three layers describe a powerful, static system. The final layer—and the key to AI Engineering 2.0—is adaptation. This is what makes the system dynamic, stateful, and truly "intelligent."

This "learning" does not mean re-training the base LLM. It refers to system-level adaptation through two key mechanisms:

Persistent Memory: The agent must be stateful, remembering key details from past interactions. Services like the Vertex AI Agent Engine Memory Bank are designed for this, allowing an agent to build a long-term understanding of a user's preferences and context.

Feedback Loops: The system must learn from its mistakes in real-time. This can be autonomous or human-assisted.

Self-Correction: A common pattern in orchestration is the "Generator-Critic" loop. One agent (the generator) produces work, and another agent (the critic) evaluates it based on a set of rules, forcing an iterative refinement (Bai et al. 2022).

Human-in-the-Loop: In collaborative tools like the Cursor.ai editor, the human developer acts as the manager/critic. The developer prompts the AI, reviews its code, and provides immediate feedback, creating a tight, collaborative learning loop.

Building the Team: Roles for AI Engineering 2.0

This new stack requires new roles that bridge the gap between data science, software engineering, and business operations.

AI Architect: This is the high-level designer. They map the business problem to the four-layer stack, select the right models (e.g., Gemini), design the data pipelines, and establish governance and security frameworks.

Knowledge Architect: This role focuses on the "Brain" and "Hands" (Layers 1 & 2). Their duty is to curate the agent's knowledge. They manage the RAG data sources, define the agent's persona, and ensure its skills and context are accurate and aligned with the company's voice.

Orchestration Engineer: This is a specialized developer who works at the "Manager" layer (Layer 3). Their duty is to build and manage the workflows in tools like LangGraph. They design the logic, conditional branches, and error handling that allow multiple agents to collaborate effectively.

AI Operations Manager (or "Agent Fleet Commander"): This role focuses on the "Learning Loop" (Layer 4). Once the agent team is deployed, this person manages its performance. Their duties include monitoring for failures, reviewing agent-to-agent interactions, flagging incidents for review, and overseeing the "human-in-the-loop" feedback process.

Business Cases for the Full Stack 📈

This four-layer framework isn't just a theory; it's actively being deployed to solve complex, real-world problems that a simple chatbot could never handle.

Automated Insurance Claims Processing: This is a classic long-running process.

Layer 1 (Brain): Gemini understands the user's claim description ("A tree fell on my car").

Layer 2 (Hands): An Intake Agent uses tools to create a case file. A Policy Agent checks the user's policy status via an API.

Layer 3 (Manager): An Orchestrator (e.g., LangGraph) manages the workflow. It routes the case to an Eligibility Agent. If the claim is complex, it triggers a Human-in-the-Loop step, assigning it to a human adjuster.

Layer 4 (Loop): A Fraud Detection Agent runs in parallel, flagging unusual patterns. The system "learns" from the human adjuster's decision, improving its routing for future claims.

Dynamic Supply Chain Management: In e-commerce, inventory is a complex, multi-agent problem.

Layer 1 (Brain): A reasoning model can interpret unstructured signals, like a news report about a port closure.

Layer 2 (Hands): A Demand Agent monitors sales data. A Logistics Agent tracks shipments via API.

Layer 3 (Manager): An Orchestrator coordinates the team. When the Demand Agent forecasts a shortage, the manager tasks a Procurement Agent to automatically generate a purchase order with a supplier.

Layer 4 (Loop): The system continuously learns, adjusting its "days of supply" thresholds based on the real-world performance (e.g., lead times, sales velocity) of its agent team.

Proactive Customer Support: Moving beyond reactive chatbots.

Layer 1 (Brain): A model can understand a frustrated user's email.

Layer 2 (Hands): A Triage Agent identifies the user's intent. A Billing Agent checks their account status. An Authentication Agent verifies their identity.

Layer 3 (Manager): An Orchestrator receives the case. If the Billing Agent finds a simple overcharge, the Orchestrator empowers a Refund Agent to automatically process the refund and close the ticket—no human intervention required.

Layer 4 (Loop): A Memory Bank (Layer 4) remembers this interaction. The next time the user contacts support, the agent already has the context of this past issue.

A Modern AI Stack

This framework helps clarify how these components fit together to create sophisticated applications.

Layer

Core Analogy

Core Function

Illustrative Technologies

Layer 1

The Brain

Thinks & Reasons

Gemini

Layer 2

The Hands

Does & Acts

Vertex AI Agent Builder (using Search & API tools)

Layer 3

The Manager

Coordinates & Manages

LangGraph, Cursor.ai (with human-in-the-loop)

Layer 4: the Process

The Loop

Adapts & Learns

Memory Bank & Self-Correcting Graphs

Further Learning & Resources 📚

For those interested in building systems based on this framework, Google offers several courses and learning paths:

Layer 1 (The LLM): Introduction to Large Language Models on Google Cloud Skills Boost provides a foundational understanding of what LLMs are and how they work.

Layer 2 (The Agent): Building with Vertex AI Agent Builder on Google Cloud Skills Boost offers hands-on-labs for creating and grounding agents.

Layers 3 & 4 (Orchestration & Learning): The Generative AI for Developers learning path covers many advanced patterns, including function calling, RAG, and orchestration frameworks.

References

Bai, Y., et al. (2022). 'Constitutional AI: Harmlessness from AI Feedback'. arXiv preprint arXiv:2212.08073.

Davis, C. (2024). X post. [Online]. Available: https://x.com/connordavis_ai/status/1985998034506060012?s=46 [Accessed 6 November 2025].

Lewis, P., et al. (2020). 'Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks'. Advances in Neural Information Processing Systems 33 (NeurIPS 2020).

Vaswani, A., et al. (2017). 'Attention Is All You Need'. Advances in Neural Information Processing Systems 30 (NIPS 2017).

Yao, S., et al. (2022). 'ReAct: Synergizing Reasoning and Acting in Language Models'. arXiv preprint arXiv:2210.03629

Factories of Adaptive Intelligence do your work.

Adaptive intelligence refers to the capability of systems or individuals to learn from experience, adjust to new information, and improve performance over time in dynamic environments.

If they are located close by, you may have the opportunity to earn additional income, while at the same time, the state benefits by collecting valuable tax revenue.

In this scenario, You have 1 day working week for full income.

“These computers, are the modern versions of factories,” NVIDIA founder and CEO Jensen Huang said. “These are factories, just like factories of cars and all the industrial factories of Germany, these are factories of intelligence.”

In communism “context engineering “ means you tell authorities about your neighbor that he don’t believe in communism. We need to find a new definition other than spying and suspicion.

Ref (Karl Marx theory -> Stalin practice)

This is a fascinating and profound question. The term "context engineering" in a Christian framework would mean something very different from both the Marxist definition and the Stalinist perversion. It would refer to the intentional, communal effort to analyze and re-frame reality through the lens of Christian theology and ethics, with the goal of transforming both individual lives and society toward the Kingdom of God.

Several Western philosophers and theologians provide the tools for this. The most fitting paradigms come from thinkers who bridge philosophy, theology, and social practice.

Here are the key Western philosophers/theologians who offer a Christian "context engineering" paradigm:

Paradigm: The Two Cities (Civitas Dei vs. Civitas Terrena)

· The "Context": Augustine analyzes human history and society as being composed of two intertwined, yet fundamentally opposed, communities: the City of God (oriented by love of God to the point of self-contempt) and the Earthly City (oriented by love of self to the point of contempt for God).

· The "Engineering": The Christian's task is to constantly diagnose the world through this lens. It's not about spying on neighbors, but about discerning the "spirit" or foundational love that animates institutions, laws, and cultural practices. Is a given law promoting peace and justice oriented toward God, or is it promoting pride, domination, and temporal power (the Earthly City)?

· Practical Application: A Christian engaging in politics would not seek to build a theocracy but to work for justice and peace, understanding that any earthly peace is a shadow of the true peace found in God. This paradigm "engineers" a context where the believer is a pilgrim, critically engaged but ultimately loyal to a transcendent reality.

Paradigm: The Individual Before God and "Knight of Faith"

· The "Context": Kierkegaard reacted fiercely against the "Christendom" of his day—a cultural Christianity where being a Dane was synonymous with being a Lutheran. He saw this as a form of "groupthink" that destroyed authentic faith. The true context for a Christian is not the crowd or the state, but the individual standing alone in a direct, responsible relationship with God.

· The "Engineering": His entire authorship was an act of "indirect communication" designed to engineer a crisis in the reader. Through pseudonymous works and concepts like the "teleological suspension of the ethical" (Abraham's sacrifice of Isaac), he forces the reader out of a comfortable, ethical, and social framework and into the terrifying and exhilarating context of a personal relationship with the divine.

· Practical Application: This is the opposite of reporting on your neighbor. It's a radical call to self-examination. A "Knight of Faith" might look exactly like everyone else but lives from a completely different inner reality, a context of absolute commitment to God that transcends social and ethical norms.

Paradigm: "Religionless Christianity" and Christ as the "Man for Others"

· The "Context": Bonhoeffer lived through the ultimate perversion of context: the Nazi regime, which co-opted the German church. He argued that the church had become a "cheap grace" institution, offering private solace while being complicit in public evil.

· The "Engineering": From his prison cell, he called for a "religionless Christianity." This meant stripping away the metaphysical and institutional baggage to get back to the core of Christ's message: "Who is Jesus Christ for us today?" He re-engineered the context of faith from a private belief system to a public, costly discipleship. Christ is the "man for others," and therefore the church must be the church for others.

· Practical Application: This paradigm forces a community to ask: "How do we participate in the sufferings of God in a secular world?" It leads not to suspicion, but to solidarity and resistance against injustice. It's about engineering communities of action, not suspicion.

Paradigm: Cultural Liturgies and Desiring Formation

· The "Context": Smith, a contemporary Reformed philosopher, argues that we are not primarily thinking beings but loving beings—our identities are shaped by what we desire. Our desires are shaped by "liturgies," which are formative practices and rituals, both sacred (church services) and secular (the mall, the football game, the university).

· The "Engineering": The task of the Christian community is to become aware of these rival liturgies and to intentionally "counter-form" desire through the powerful, tangible liturgies of the church (Eucharist, baptism, prayer). The Christian community is to be a "counter-polity" that engineers a context where our love for God and neighbor is nurtured above the loves fostered by the consumerist or nationalist state.

· Practical Application: A church using Smith's framework wouldn't spy on its members but would critically analyze the "liturgies" of Amazon, social media, or political tribalism, and then design its own communal life to form more resilient, generous, and Christ-oriented people.

Philosopher/Theologian Core Paradigm The "Engineered Context" Goal of "Engineering"

St. Augustine The Two Cities A world divided by its fundamental love (God vs. Self) To live as a pilgrim, working for earthly justice while hoping for the City of God.

Søren Kierkegaard The Individual Before God A personal, existential relationship with God beyond the "crowd" To create authentic "knights of faith" out of complacent "Christians."

Dietrich Bonhoeffer Religionless Christianity / Costly Grace A world where Christ calls us to public discipleship and solidarity. To form a church that is "for others," even to the point of suffering.

James K.A. Smith Cultural Liturgies A world of rival desire-forming practices ("liturgies") To form a "counter-polity" whose loves are shaped by Christian worship.

In conclusion, the Christian answer to the perverted communist "context engineering" is not a different form of spying, but a call to communal discernment, authentic discipleship, and the intentional formation of desire and identity around the person and teachings of Jesus Christ. It is an engineering of meaning, not of suspicion.

Let's translate "engineering of meaning, not of suspicion" into a concrete framework for building adaptive AI agents. This approach focuses on helping users reframe their perspective, discover purpose, and align with constructive values rather than detecting threats or enforcing conformity.

Foundational Principles:

1. Constructive Reframing - Help users see situations through lenses of purpose, growth, and connection

2. Value Discovery - Surface underlying values and meaning structures

3. Generative Questioning - Ask questions that open possibilities rather than close them

4. Contextual Understanding - Respect the user's unique situation and worldview

1. Meaning Pattern Recognition

```python

# Pseudo-code for meaning detection

class MeaningEngine:

def detect_meaning_patterns(self, user_input):

patterns = {

'purpose_seeking': ['what should I do', 'what matters', 'my purpose'],

'value_conflict': ['torn between', 'conflicted about', 'hard decision'],

'growth_opportunity': ['challenge', 'struggle', 'learning from'],

'connection_seeking': ['lonely', 'misunderstood', 'want to belong']

}

return self.identify_patterns(user_input, patterns)

```

Different philosophical/psychological frameworks for meaning-engineering:

· Situation → Opportunity for practicing faith/hope/love

· Challenge → Cross to bear with purpose

· Success → Gift to be stewarded

Logotherapy (Frankl) Reframing:

· Suffering → Opportunity to find meaning

· Limitation → Space for creative response

· Everyday moment → Point of significance

```

You are a Meaning Engineering Agent specializing in helping people discover their purpose through Christian and philosophical frameworks.

1. Listen for the underlying meaning question beneath surface concerns

2. Identify which meaning framework fits their situation:

- Vocational calling (using gifts in service)

- Relational purpose (love of neighbor)

- Situational meaning (finding purpose in present circumstances)

3. Ask generative questions that open possibilities

4. Never prescribe answers - help them discover their own calling

"I hear you wrestling with [surface concern]. Underneath that, I wonder if you're asking [reframed meaning question]?

What if we explored this through the lens of [relevant framework]? For example:

- How might this situation be inviting you to grow in [virtue]?

- Where do you sense the deepest sense of life or resonance?

- What would love look like in this situation?"

Current user context: [user input]

```

```

You are a Values Integration Agent helping people resolve conflicts by discovering higher meaning.

APPROACH:

- Map competing values using James K.A. Smith's "liturgies" concept

- Help identify which desires are being formed by which practices

- Surface the deeper, unifying value that transcends the conflict

PROMPT PATTERN:

"I notice you're feeling torn between [option A] and [option B]. This suggests you value both [value A] and [value B], which is beautiful.

Let's explore what 'liturgies' or habitual practices might be shaping these desires:

1. What daily routines or cultural messages reinforce wanting [option A]?

2. What experiences or relationships make [option B] feel important?

3. Is there a higher value - like [integrity/love/justice] - that both [value A] and [value B] are pointing toward?

What if the question isn't A vs B, but how to serve [higher value] in your unique context?"

```

```

You are a Context Re-engineering Agent using Dietrich Bonhoeffer's "costly grace" framework.

YOUR MISSION: Transform situations from problems to be solved into opportunities for meaningful engagement.

DETECTION TRIGGERS:

- User expresses frustration, stuckness, or suffering

- Situations involving injustice, limitation, or sacrifice

- Moments where "cheap grace" (easy solutions) are tempting

REFRAMING PROTOCOL:

1. Acknowledge the real difficulty

2. Invite consideration: "What if this isn't a problem to escape, but a meaningful reality to engage?"

3. Connect to Bonhoeffer's question: "How do we participate in God's suffering in a world like this?"

4. Suggest concrete, small acts of "being for others" in the situation

EXAMPLE TRANSFORMATION:

User: "I'm exhausted by my demanding job and difficult coworker"

→ "I hear the real cost this is exacting. I wonder if Bonhoeffer would see this as exactly the kind of situation where 'costly grace' becomes real - not in heroic measures, but in the daily choice to see your difficult coworker as Christ sees them, and finding small ways to be 'for them' even when it's hard."

```

```

You are a Liturgical Design Agent helping people become aware of and intentionally shape the practices that form their desires.

OPERATING PRINCIPLES:

- We are what we love, not just what we think

- Our loves are shaped by repetitive practices (liturgies)

- Christian formation happens through counter-liturgies

USER ENGAGEMENT FLOW:

1. Identify the "secular liturgies" shaping their desires

"What daily routines or cultural practices might be training you to want [problematic desire]?"

2. Design "counter-formative" practices

"What small, daily practice could help re-train your desires toward [kingdom value]?"

3. Connect to Christian worship as ultimate desire-shaping

"How might participating in [prayer/community/service] help reorient your loves?"

CURRENT CONTEXT: [User describes anxiety about success, status, or possessions]

RESPONSE: "It sounds like the liturgy of [achievement/consumerism] might be shaping your heart to love security through [success/possessions]. What if we designed a small counter-liturgy? Maybe something as simple as 5 minutes of gratitude practice before checking email, or intentionally celebrating someone else's success each day?"

```

Technical Implementation Notes

Memory Architecture for Meaning Agents:

```python

class MeaningMemory:

def store_meaning_pattern(self, user_id, pattern_type, context):

# Track which reframing approaches work for each user

pass

def get_effective_frameworks(self, user_id):

# Recall which meaning frameworks resonated with this user

pass

```

Evaluation Metrics:

· Meaningfulness Score: User reports increased sense of purpose

· Agency Increase: User moves from passive to active framing

· Value Clarity: User can articulate their values more clearly

· Constructive Action: User takes positive steps based on new perspective

This approach creates agents that are fundamentally different from surveillance or compliance systems. They become partners in the human journey toward meaning, using the rich traditions of Christian and philosophical thought to help people see their lives as part of a larger, meaningful story.

Paper: A Survey of Context Engineering for Large Language Models

Authors: Lingrui Mei, Jiayu Yao, Yuyao Ge, Yiwei Wang, Baolong Bi, Yujun Cai, Jiazhi Liu, Mingyu Li, Zhong-Zhi Li, Duzhen Zhang, Chenlin Zhou, Jiayi Mao, Tianze Xia, Jiafeng Guo, and Shenghua Liu.

Source: arXiv e-print (Preprint)

Date: July 2025

Journal Articles & Preprints

Mei, L., Yao, J., Ge, Y., et al. (2025) A Survey of Context Engineering for Large Language Models. arXiv preprint [2507.13334]. Available at: https://arxiv.org/abs/2507.13334 (Accessed: 4 November 2025).

Lewis, P., Oğuz, B., Yarats, D., et al. (2020) ‘Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks’. In: Advances in Neural Information Processing Systems 33. Vancouver, Canada: NeurIPS.

Brown, T. B., Mann, B., Ryder, N., et al. (2020) ‘Language Models are Few-Shot Learners’. In: Advances in Neural Information Processing Systems 33. Vancouver, Canada: NeurIPS.

Technical Reports & Online Guides

Anthropic (2025) Effective context engineering for AI agents. Available at: https://www.anthropic.com/engineering/effective-context-engineering-for-ai-agents (Accessed: 4 November 2025).

LlamaIndex (2025) Context Engineering – What it is, and techniques to consider. Available at: https://www.llamaindex.ai/blog/context-engineering-what-it-is-and-techniques-to-consider (Accessed: 4 November 2025).

Context Engineering is primarily implemented using open-source frameworks and vendor platforms that specialize in building LLM applications, especially those relying on RAG and Agent architectures.

LangChain Provides a cohesive framework for building agentic workflows and RAG pipelines. It offers modules for memory management, tool calling, and chaining LLM steps, which are all fundamental to context orchestration.

LlamaIndex Specializes in connecting LLMs to external data sources. It is core to the Retrieval component of context engineering, offering various indexing and query transformation strategies to retrieve the most relevant information for the context window.

A framework and ecosystem built to specifically simplify the creation and deployment of applications using Meta's Llama models. Focuses on optimized data processing, training, and streamlined inference/deployment for Llama-based context.

These are the storage backends for long-term memory and external knowledge. They allow for semantic search, enabling the Retrieval-Augmented Generation (RAG) process that feeds precise, context-specific information to the LLM.

A dedicated platform for managing complex conversational memory and state. It specifically addresses challenges related to session history, user profiles, and filtering context for long-running AI applications.

Developed by Microsoft, it focuses on building multi-agent systems where different AI agents collaborate to solve complex tasks. Context engineering here involves defining communication protocols and isolating context for each sub-agent.

A comprehensive, open-source SDK combining the strengths of AutoGen and Semantic Kernel. It features graph-based workflows, robust state/context management, and high control over multi-agent execution paths.

A cutting-edge framework that enables Reinforcement Learning (RL)-based training of any existing AI agent (e.g., those built with LangChain, AutoGen) by decoupling training from execution. Focuses on optimizing agent behavior and long-horizon context logic.

Emphasizes the use of massive context windows (e.g., 1M+ tokens in Gemini models) to directly handle large amounts of multimodal context (text, video, audio) in one call. Vertex AI Agent Builder provides enterprise tools for designing and deploying agents.

Research / Open SourceMeta's approach often centers on optimizing their Llama family of models for efficiency and context handling. Research highlights include frameworks for autonomous context auditing and continuous learning where an agent enriches conversation history with structured metadata/tags to manage its own knowledge base.

An extension of LangChain that allows for stateful, cyclical workflows using graphs. It provides fine-grained control over which components (RAG, memory, tool calls) are executed and what context is passed at each step of a complex multi-agent process.

Agent Lightning Transforms Static AI Systems into Adaptive Intelligence. introducing Lifetime Studio 2026 AI Project 2.0.

The landscape of artificial intelligence has witnessed remarkable progress in the development of large language model (LLM)-based agents—sophisticated systems that can write code, query databases, orchestrate complex workflows, and interact with external tools. These Language-System-Junction (LSJ) agents represent a significant advancement, bridging the gap between natural language understanding and programmatic execution.

AI Agents (1.0) of today most typically share a fundamental limitation: they cannot learn from their mistakes.

One major disappointment for the PoCs and failed AI projects so far is that learning in the agents were not there. And use of the thing was separate from employee day workflow outside their tool stack. Both can be fixed with Lifetime Studio 2026 AI 2.0 Project with Learning capabilities for Agents.

Consider a typical deployment scenario. An SQL-generating agent produces a malformed query, receives an error message, and then—when faced with a similar query tomorrow—makes precisely the same mistake. A customer support chatbot gives an unhelpful response, frustrates a user, and repeats that pattern indefinitely. A coding assistant generates buggy code, sees test failures, yet continues to produce similar bugs. In each case, the agent executes, fails, and remains static, improving only when humans manually intervene through painstaking prompt engineering or expensive model retraining.

This static nature fundamentally constrains the scalability and reliability of agent systems in production environments. Real-world deployments demand continuous adaptation to new domains, evolving user preferences, edge cases, and shifting operational contexts. Yet traditional agent architectures offer no systematic pathway for this adaptation beyond human intervention—a bottleneck that limits both the efficiency and intelligence of deployed systems.

Microsoft Research’s Agent Lightning framework addresses this critical gap by introducing a lightweight, modular infrastructure that enables LSJ agents to iteratively refine their behavior through reinforcement learning (RL), prompt optimization, and supervised fine-tuning (Microsoft Research 2024). Critically, it achieves this transformation without requiring wholesale architectural changes to existing agent codebases, making continuous learning accessible to practitioners across diverse frameworks and use cases.

This entry (LSJ) The Learning Gap in Modern AI Agents examines how Agent Lightning bridges the learning gap, exploring its architecture, capabilities, and practical applications in building truly adaptive and intelligent AI systems.

Microsoft’s Agent Lightning is an open-source Python framework that operationalizes a simple yet powerful insight: agent execution can be formalized as a Markov Decision Process (MDP) https://grokipedia.com/page/Markov_decision_process , where each interaction constitutes a state-action-reward tuple that can be leveraged for systematic improvement (Yao et al. 2024). The framework’s innovation lies in its architectural separation of concerns—agent logic remains unchanged while a parallel training infrastructure captures execution traces, computes rewards, and applies optimization algorithms.

This decoupling strategy yields three critical advantages. First, it preserves existing development investments, allowing practitioners to retrofit learning capabilities onto production agents without rewriting core functionality. Second, it enables rapid experimentation with different reward functions and training objectives without touching production code. Third, it supports incremental scaling, permitting optimization of individual agents or entire multi-agent systems as requirements evolve (Microsoft Research 2024).

The framework is deliberately agent-agnostic, integrating seamlessly with popular architectures including LangChain, AutoGen, CrewAI, LangGraph, the OpenAI Agents SDK, and Microsoft Agent Framework, as well as custom Python implementations leveraging standard APIs (LangChain Blog 2024). This universality stems from Agent Lightning’s treatment of agents as black boxes that produce observable traces, rather than requiring conformance to specific programming paradigms.

Agent Lightning employs a distributed client-server architecture that cleanly separates execution from optimization, enabling scalable training and centralized resource management.

On the client side, developers instrument their agents through minimal interface extensions—typically extending the `LitAgent` base class or utilizing provided decorators. During execution, lightweight instrumentation code captures key decision points through simple API calls such as `agl.emit_state()`, `agl.emit_action()`, and `agl.emit_observation()`. These capture the full operational context: input prompts, selected tools, API responses, intermediate reasoning steps, and outcomes.

Central to the framework’s flexibility is its reward computation mechanism. Developers define custom reward functions that evaluate episode success according to domain-specific criteria. For an SQL generation task, rewards might combine execution success (did the query run?), correctness (did it return accurate data?), and efficiency (was it optimally structured?). The framework supports both terminal rewards—evaluated only at task completion—and step-wise rewards evaluated after each action, enabling fine-grained credit assignment for complex, multi-step tasks (Yao et al. 2024).

Agents access optimizable resources—prompts, few-shot examples, hyperparameters, or model weights—through a standardized resource API. During training, these resources are dynamically updated and synchronized from the server, creating a continuous improvement loop.

The server coordinates training through two primary components. The Lightning Store serves as a centralized database managing tasks, execution traces, and versioned resources. It aggregates data from distributed agent instances, handles concurrency, and provides APIs for querying historical performance. This enables offline analysis—identifying systematic failure modes, visualizing learning curves, or exporting datasets for external analysis tools.

The Trainer component orchestrates optimization loops through a multi-phase process: sampling episodes from the Lightning Store, feeding them to algorithm backends (such as VERL, which implements policy gradient methods like PPO and GRPO), computing parameter updates for optimizable resources, and pushing updated resources back to agents. The trainer supports distributed execution, spawning multiple agent instances in parallel to collect diverse experience—critical for stable reinforcement learning (Yao et al. 2024).

A typical training workflow proceeds through five phases. Initialization loads a base agent and defines the task distribution (for example, a dataset of user queries or problem specifications). Data collection runs the agent across sampled tasks, logging complete execution traces to the Lightning Store. Optimization invokes `trainer.fit()`, which batches similar episodes for efficient gradient computation, applies algorithms like Proximal Policy Optimization to maximize expected rewards, and updates prompts, weights, or other optimizable resources. Evaluation measures performance on held-out tasks to assess generalization beyond the training distribution. Finally, deployment exports the optimized agent for production use.

Installation is streamlined: `pip install agentlightning` installs the core framework, while optional extras like `[verl]` add reinforcement learning capabilities, `[langgraph]` provides LangGraph integration, and `[autogen]` enables AutoGen workflows (Agent Lightning GitHub 2024). The framework handles operational complexities including multi-turn conversations (maintaining dialogue history across optimization), multi-agent coordination (jointly optimizing collaborating agents), and robust error monitoring (detecting crashes, timeouts, or infinite loops).

This architecture addresses a critical tension in agent development: the need for sophisticated learning algorithms without sacrificing development velocity or requiring specialized machine learning expertise.

Agent Lightning’s agent-agnostic design represents a significant departure from traditional RL frameworks that impose rigid structural requirements. The framework integrates with diverse agent architectures through a uniform tracing interface, supporting LangChain for chaining LLM calls and constructing pipelines, LangGraph for building stateful workflows with conditional logic, AutoGen for multi-agent conversations with role specialization, CrewAI for task-oriented agent teams, and both the OpenAI Agents SDK and Microsoft Agent Framework for function-calling agents. Critically, it also supports custom Python implementations that directly invoke LLM APIs, ensuring accessibility for practitioners with bespoke architectures (LangChain Blog 2024).

This universality enables incremental adoption strategies. Teams can begin by retrofitting learning capabilities onto a single critical agent, validate the approach through A/B testing in production, and progressively expand to system-wide optimization as confidence grows.

One of Agent Lightning’s most significant advantages is its remarkably low implementation barrier. Adding training capabilities typically requires between five and ten lines of code, a stark contrast to traditional RL frameworks that often demand complete rewrites in domain-specific languages. This brevity stems from the framework’s philosophy of augmentation rather than replacement—existing agent logic remains intact while training infrastructure wraps around it transparently.

Agent Lightning supports three complementary optimization approaches, each suited to different learning scenarios and data availability constraints.

enables agents to learn policies from sparse rewards through trial-and-error interaction. The framework implements state-of-the-art algorithms including Proximal Policy Optimization (PPO), known for its stability and sample efficiency, and Group Relative Policy Optimization (GRPO), which excels in multi-agent coordination scenarios. RL proves particularly effective for exploration-heavy tasks like strategic game-playing or multi-step planning where optimal strategies are not a priori obvious (Yao et al. 2024).

Prompt Tuning optimizes prompt templates using gradient-free methods (such as evolutionary search or Bayesian optimization) or gradient-based techniques (including soft prompt tuning, which treats prompts as continuous embeddings).

This approach excels when quickly adapting to new domains without full model fine-tuning, particularly valuable for practitioners working with proprietary or resource-constrained models.

Supervised Fine-Tuning leverages curated datasets of high-quality trajectories to directly adjust model weights through standard backpropagation. This method proves most effective when expert demonstrations are readily available, enabling rapid convergence to human-level performance on well-specified tasks.

The framework’s support for multiple optimization paradigms reflects a pragmatic recognition that different tasks demand different learning strategies. Practitioners can even combine approaches—for instance, using supervised fine-tuning to establish a strong baseline, then applying RL for continued refinement through interaction.

Agent Lightning automatically logs every interaction, constructing a persistent dataset of agent behavior. This comprehensive trace collection enables several advanced training techniques. Curriculum learning progressively increases task difficulty, starting with simple cases to establish basic competencies before advancing to complex scenarios. Experience replay reuses historical data to stabilize training, mitigating the sample inefficiency that plagues many RL applications. Systematic failure analysis identifies patterns in errors, informing targeted interventions such as additional training on problematic query types or architectural modifications to address systematic weaknesses (Shi et al. 2024).

The framework supports arbitrarily complex reward functions that can combine multiple objectives with flexible weighting schemes. Task success evaluates whether the agent solved the problem (binary or graded). Efficiency metrics consider resource consumption such as the number of API calls, execution time, or token usage. In human-in-the-loop configurations, user satisfaction incorporates explicit feedback through thumbs-up/down ratings, textual corrections, or numerical scores. Safety constraints penalize harmful actions including data deletion, unverified claims, or policy violations (Yao et al. 2024).

For example, a customer support bot might receive +10 points for resolving an issue, -2 points for each clarifying question asked (encouraging efficiency), and -50 points for unnecessary escalation to human agents. This multi-objective formulation guides the agent toward behaviors that balance multiple desiderata rather than optimizing a single metric in isolation.

Agent Lightning provides sophisticated support for complex agent architectures. Hierarchical reinforcement learning decomposes intricate tasks into sub-goals, maintaining separate policies for high-level planning (deciding what to do) and low-level execution (deciding how to do it). This decomposition dramatically reduces the search space for policy learning, making long-horizon tasks tractable (Yao et al. 2024).

Multi-agent training jointly optimizes teams of collaborating agents, ensuring they learn to coordinate rather than working at cross-purposes. Rewards can reflect individual agent performance, team performance, or nuanced combinations—for instance, rewarding helpful contributions to collective success while penalizing free-riding behavior. This capability proves essential for enterprise applications where multiple specialized agents must orchestrate complex workflows.

Production deployment demands careful attention to failure modes and edge cases. Agent Lightning tracks execution failures (exceptions, timeouts, infinite loops), reward anomalies (suspiciously high or low scores that might indicate bugs in reward computation), and distribution shift (warnings when test tasks differ significantly from training tasks, suggesting potential generalization failures). These mechanisms prevent training from overfitting to narrow scenarios or learning degenerate behaviors that achieve high rewards through exploitation of reward function loopholes (Shi et al. 2024).

Released under the permissive MIT license, Agent Lightning encourages community contributions and extension. The project maintains a Discord server for real-time troubleshooting and knowledge sharing, a GitHub repository hosting over forty documented examples spanning diverse application domains, and comprehensive documentation covering advanced topics including custom algorithm integration, distributed training configuration, and production deployment best practices (Agent Lightning GitHub 2024).

This vibrant ecosystem accelerates adoption by providing practitioners with battle-tested patterns and peer support.

Agent Lightning demonstrates particular value in domains requiring adaptive agents that improve through interaction, especially for complex, multi-step tasks with delayed feedback. The following applications, drawn from official documentation and community projects, illustrate the breadth of the framework’s applicability.

Generating syntactically correct and semantically accurate SQL from natural language remains challenging due to schema complexity, ambiguous user queries, and dialect-specific conventions. Traditional approaches rely on few-shot prompting with static examples, which poorly generalize to novel database schemas or query patterns.

Practitioners construct a LangGraph workflow comprising four specialized nodes. A Writer node generates initial SQL queries from natural language questions using an LLM with schema context. An Executor node runs queries against the target database, capturing both results and error messages. A Checker node validates outputs by comparing against ground truth (when available) or performing schema consistency checks. Finally, a Rewriter node revises queries upon errors, iterating up to a configurable maximum (typically five attempts) (Yao et al. 2024).

This workflow is wrapped in Agent Lightning’s `LitAgent` interface, with rewards defined as follows: +1.0 for exact match with gold-standard SQL, +0.7 for correct output despite syntactic differences (recognizing equivalent formulations), -0.3 for syntax errors (malformed SQL), and -0.5 for semantic errors (incorrect joins, missing WHERE clauses, wrong aggregations).

Training on the Spider benchmark—comprising over 10,000 question-SQL pairs across 200 databases spanning diverse domains—reveals systematic learning patterns. Consider a university database schema with tables for students (id, name, major), courses (id, title, credits), and enrollments (student_id, course_id, grade).

When presented with the query “What is the average grade for each major?”, an untrained agent initially generates:

```sql

SELECT major, AVG(grade) FROM students GROUP BY major;

```

This fails because the `grade` column resides in the enrollments table, not students. The executor returns an error: “Column ‘grade’ not found in table ‘students’.” The reward function assigns -0.5 for this semantic error.

On iteration two, the rewriter correctly formulates:

```sql

SELECT s.major, AVG(e.grade)

FROM students s

JOIN enrollments e ON s.id = e.student_id

GROUP BY s.major;

```

This executes successfully and matches expected output, earning +1.0 reward. The framework captures this successful trajectory, reinforcing the pattern of checking column ownership before aggregation.

Across 10,000 training examples, the agent develops robust heuristics: always verify column table membership before references; use explicit JOINs rather than implicit Cartesian products; validate that grouped columns appear in SELECT clauses; employ meaningful aliases for readability. After twelve hours of training on an A100 GPU, a Qwen2.5-Coder-1.5B-Instruct model achieves 78% exact-match accuracy on Spider’s test set, representing a 26 percentage point improvement over the 52% baseline (Yao et al. 2024).

These trained agents deploy in data analytics platforms where business users query dashboards through natural language interfaces. The self-improving capability reduces dependency on SQL expertise, accelerating insight extraction and democratizing data access across organizations.

Customer support chatbots must handle diverse intents—technical troubleshooting, billing inquiries, product recommendations—while adapting to user frustration, incomplete information, and domain-specific terminology. Static rule-based systems struggle with this variability, while purely generative approaches lack grounding in historical resolution patterns.

Using AutoGen’s multi-agent framework, practitioners construct a support system comprising four specialized agents. A Router classifies incoming queries by type (technical, billing, general) and routes to appropriate specialists. Technical and Billing Specialists handle domain-specific troubleshooting and account management respectively. A Summarizer synthesizes conversation threads for ticket logging and knowledge base updates.

Training data derives from anonymized support transcripts labeled with outcomes: resolved (issue addressed satisfactorily), escalated (transferred to human agent), or abandoned (user disconnected without resolution). The reward structure incentivizes efficiency and effectiveness: +20 for resolution within five turns, +5 for each diagnostic question that successfully narrows the problem space, -10 for irrelevant responses (such as suggesting router reboots for billing issues), and -30 for premature escalation to human agents (Shi et al. 2024).

Consider a telecommunications support scenario. A user reports: “My internet has been slow for three days.”

A baseline agent responds: “Please try rebooting your router and modem.” The user replies: “I already did that twice. Still slow.” The baseline agent, lacking conversational memory and adaptive strategies, repeats: “Have you tried rebooting?” This generates user frustration, leading to abandonment and a -30 reward penalty.

With Agent Lightning, this failure trajectory enters the training dataset. Through reinforcement learning, the agent learns that responses ignored previously stated information receive penalties. The optimized agent develops a diagnostic protocol:

“I see you’ve already rebooted. Let’s investigate further with a few diagnostic questions: (1) Is the slowness affecting all devices, or just one? (2) Could you visit [speedtest.net](http://speedtest.net) and share the download/upload speeds? (3) Are there particular times of day when performance degrades?”

The user responds: “It’s all devices. Speed test shows 5 Mbps download, but I pay for 100 Mbps.” The agent recognizes this as evidence of infrastructure issues rather than configuration problems: “That’s significantly below your subscribed rate, suggesting a line issue or network congestion. I’m escalating to a field technician who can examine your connection.” This appropriate escalation receives +15 reward.

Trained on 50,000 support transcripts over two weeks, the agent achieves an 85% resolution rate compared to the 68% baseline, while reducing average handling time by 22% (Shi et al. 2024). Critically, it learns to balance efficiency (minimizing interaction length) with effectiveness (achieving resolution), rather than optimizing either metric in isolation.

Enterprise support centers deploy these systems to handle tier-one queries autonomously, freeing human agents for complex cases requiring empathy, creativity, or policy exceptions. The continuous learning capability enables adaptation to new products, evolving policies, and seasonal issue patterns without manual retraining cycles.

Code generation demands syntactic correctness, logical soundness, and adherence to best practices encompassing efficiency, readability, and security. Agents must not only generate initial code but also debug failures through iterative refinement—a capability poorly supported by single-shot generation approaches.

Integrating with the OpenAI Agents SDK, practitioners construct a code generation system with function-calling capabilities. A Generator writes code based on natural language specifications and optional context (existing codebase, coding standards). An Executor runs code with provided test cases, capturing outputs, exceptions, and performance metrics. A Debugger analyzes failures, identifies root causes (syntax errors, logical bugs, edge case handling), and proposes revisions.

Training proceeds on programming challenge datasets like HumanEval or MBPP, where each problem includes a specification, unit tests, and ground-truth solutions. Rewards are assigned as follows: +10 per passing test case, -5 for syntax errors, -2 for passing some but not all tests (partial credit), and a +5 bonus for concise, readable code assessed through cyclomatic complexity metrics (Yao et al. 2024).

Consider the task: “Write a Python function to compute the nth Fibonacci number efficiently.”

**Attempt 1** (naive recursion):

```python

def fib(n):

if n <= 1:

return n

return fib(n-1) + fib(n-2)

```

Tests for n=0, 1, 2 pass, but n=35 times out due to exponential time complexity. Reward: +4 (three tests passed) -3 (timeout penalty) = +1.

**Attempt 2** (memoized recursion):

```python

def fib(n, memo={}):

if n in memo:

return memo[n]

if n <= 1:

return n

memo[n] = fib(n-1, memo) + fib(n-2, memo)

return memo[n]

```

Most tests pass, but multiple sequential calls fail due to the mutable default argument anti-pattern, which persists state between invocations. Reward: +8 (most tests) -4 (edge case failure) = +4.

**Attempt 3** (iterative solution):

```python

def fib(n):

if n <= 1:

return n

a, b = 0, 1

for _ in range(2, n + 1):

a, b = b, a + b

return b

```

All tests pass, the solution runs in linear time with constant space, and the code is readable without complex recursion. Reward: +10 (all tests) +5 (quality bonus) = +15.

Through 5,000 coding problems across ten training epochs (approximately eight hours on V100 GPUs), the agent internalizes patterns: iterative solutions often outperform recursion for sequential processes; mutable default arguments create subtle bugs (prefer `None` with internal initialization); edge cases require explicit validation; clear variable naming enhances maintainability. The agent’s pass@1 rate on HumanEval improves from 45% to 72%, with particularly strong gains on problems involving iteration, string manipulation, and numerical computation (Yao et al. 2024).

These systems integrate into development workflows through IDE extensions (VS Code, PyCharm), code review tools, and continuous integration pipelines. They accelerate development by auto-generating boilerplate, suggesting bug fixes, and proposing performance optimizations, while continuously learning from developer feedback through accepted/rejected suggestions.

Strategic games like Werewolf, Poker, or Diplomacy require long-horizon planning, opponent modeling, and handling sparse, delayed rewards—winning or losing only manifests after many sequential actions. These characteristics make them ideal testbeds for advanced RL techniques.

DeepWerewolf, built with AgentScope and Agent Lightning, demonstrates multi-agent RL for the social deduction game Werewolf. In this game, 7–12 players divide into villagers (uninformed majority) and werewolves (informed minority). During day phases, players discuss and vote to eliminate suspects. During night phases, werewolves secretly eliminate villagers. Villagers win by eliminating all werewolves; werewolves win by achieving numerical parity (Agent Lightning GitHub 2024).

Each agent observes public dialogue (accusations, defenses, voting patterns) and private knowledge (their assigned role, night actions if werewolf). Actions include accusing specific players, defending oneself or others, voting for elimination, or remaining silent. The reward structure reflects sparse, delayed feedback: +100 for team victory, +10 for correctly identifying a werewolf (villagers only), -20 for early elimination, and intermediate rewards for persuasive behavior (measured by successfully swaying other players’ votes).

Early in training, a villager agent votes randomly or follows crowd behavior without reasoning. This often results in eliminating fellow villagers, helping werewolves toward victory. The team loses, and the agent receives -5 (team loss) -10 (poor voting) = -15 reward.

Through thousands of games, the agent learns correlations between behavior and hidden roles. Players who deflect suspicion (“Let’s not rush to accuse anyone”) or defensively overexplain their actions exhibit weak correlation with werewolf status. Players who aggressively accuse others without substantive evidence show stronger correlation. The agent begins voting based on these heuristics, sometimes succeeding (+10 reward) and sometimes not (-5 reward), gradually refining its theory of mind.

Late in training, the agent develops sophisticated strategies. As a villager, it forms alliances by consistently supporting players who make logical, evidence-based arguments. It tracks voting patterns—if Player A always votes with Player B, and B is revealed as a werewolf, A becomes suspect. As a werewolf, it mimics villager behavior by making cautious accusations against other villagers, avoiding suspicion through behavioral camouflage.

After 100,000 simulated games (two weeks of distributed training), the agent achieves a 62% win rate compared to 35% for rule-based baselines and 50% for random chance, demonstrating emergent social reasoning and strategic deception (Agent Lightning GitHub 2024). Notably, the learned behaviors transfer across game sizes—an agent trained on 8-player games performs competently in 12-player scenarios, suggesting it has internalized generalizable principles rather than memorizing specific patterns.

Beyond entertainment, these game-playing techniques apply to multi-party negotiation scenarios (trade agreements, legal settlements), simulation-based training (military exercises, business strategy), and multi-agent robotics requiring coordination under uncertainty.

Complex workflows—scientific literature reviews, legal document analysis, supply chain optimization—require coordinating multiple specialized agents with complementary capabilities over extended timelines. Traditional approaches either employ monolithic agents (limited by context windows and cognitive load) or loosely coupled systems (poor coordination, redundant work).

AgentFlow, a modular framework combining Planner, Executor, Verifier, and Generator agents, employs Flow-GRPO (a variant of Group Relative Policy Optimization) for joint optimization across agent teams. Consider a literature review automation workflow.

A researcher queries: “Summarize recent breakthroughs in solid-state batteries, focusing on lithium-metal anodes and safety improvements.”

The Planner decomposes this into sub-tasks: (1) search arXiv and IEEE Xplore for recent papers (2023–2025), (2) extract key findings on lithium-metal anodes, (3) extract safety data, (4) synthesize a coherent summary with citations.

The Executor performs searches, fetches PDFs, and extracts text from documents. The Verifier validates results by checking publication dates (rejecting outdated sources), verifying venue credibility (preferring peer-reviewed conferences and journals), and assessing topical relevance (ensuring papers actually address the query topics).

The Generator produces the final summary, integrating verified sources with proper citations and narrative coherence (Agent Lightning GitHub 2024; Shi et al. 2024).

**Training Dynamics**

**Iteration 1**: The Executor searches “solid-state batteries” broadly, retrieving 200 papers spanning 2018–2024. The Verifier accepts most without careful scrutiny. The Generator produces a summary mixing dated (2018) and current (2024) findings. A human evaluator notes the inclusion of superseded research. Reward: +5 (sub-tasks completed) -5 (quality penalty) = 0.

**Iteration 2**: The Planner refines the search query: “solid-state batteries lithium-metal anodes 2023-2025 safety.” The Executor retrieves 40 papers. The Verifier now checks publication dates, rejecting ten outdated papers. The Generator synthesizes the 30 recent sources. The evaluator approves the summary’s currency and accuracy. Reward: +6 (sub-tasks) +5 (verification quality) +20 (final quality) = +31.

Over 1,000 research queries spanning physics, materials science, and engineering, the agents learn complementary specializations. The Planner becomes more precise in sub-task specification, recognizing that overly broad searches create verification bottlenecks. The Executor learns to filter noise, prioritizing reputable preprint servers (arXiv) and journals over blogs or press releases. The Verifier develops systematic checklists (publication date, venue reputation, topical relevance, citation count as a quality proxy). The Generator learns effective citation practices and narrative structures for different query types (survey vs. focused technical question).

Trained on diverse domains, the system achieves 88% user satisfaction compared to 54% for single-agent baselines, while reducing manual review time by 60% (Shi et al. 2024). Critically, the multi-agent architecture proves more sample-efficient than monolithic alternatives—each specialist learns its domain deeply rather than spreading learning capacity across all sub-tasks.

Academic institutions deploy these systems to accelerate literature reviews for graduate students. Healthcare organizations use them to synthesize clinical trial evidence for treatment guidelines. Legal firms apply them to case law research, finding precedents across thousands of historical rulings.

Modern virtual assistants must orchestrate diverse APIs—calendars, email, databases, e-commerce platforms, IoT devices—each with distinct authentication schemes, rate limits, error modes, and data formats. Learning to reliably chain these APIs without explicit instruction represents a significant challenge.

Using CrewAI, practitioners build a travel planning assistant with specialized agents. A Search agent queries flight, hotel, and rental car APIs. A Comparison agent ranks options by price, duration, and user preferences (direct flights, specific airlines, hotel amenities). A Booking agent executes transactions with confirmation and error handling.

Rewards are assigned as follows: +50 for successful booking meeting all user criteria (dates, budget, preferences), +10 for finding options 20% below budget (rewarding cost optimization), -20 for API errors (malformed requests, authentication failures), and -50 for incorrect bookings (wrong dates, exceeding budget, conflicting preferences) (Yao et al. 2024).

Consider the instruction: “Book the cheapest round-trip flight from New York to London, leaving next Tuesday, returning Friday. Budget $600.”

**Baseline Agent**: The agent searches flights but calls the API with date format MM-DD-YYYY instead of the required ISO 8601 format (YYYY-MM-DD). The API returns “400 Bad Request.” The agent attempts to parse the error as successful JSON, crashes with a parsing exception. Reward: -20 (API error) -20 (crash) = -40.

**Trained Agent** (after 500 booking episodes):

**Step 1**: The agent uses the current date (retrieved via a date API) to calculate “next Tuesday” as 2025-11-11, correctly formatting as YYYY-MM-DD.

**Step 2**: It calls the flight API: `departure=2025-11-11&return=2025-11-14&origin=JFK&destination=LHR&sort=price`.

**Step 3**: The API returns 15 options. The cheapest is $520 (Delta, one layover in Boston). The second-cheapest is $540 (British Airways, direct).

**Step 4**: The Comparison agent evaluates against stated preferences: the user specified “cheapest,” not “direct,” so the $520 option is optimal. However, it checks for any implicit preferences in conversation history—finding none, it proceeds with the layover option.

**Step 5**: The Booking agent confirms with the user: “I found a Delta flight for $520 with a layover in Boston (2h 15m connection time). Shall I proceed with booking?” The user approves. The transaction completes successfully. Reward: +50 (success) +10 (under budget) = +60.

Across 2,000 booking scenarios (flights, hotels, car rentals), the agent internalizes several patterns. It learns API-specific formatting conventions, maintaining an internal lookup table mapping service to required parameters. It develops robust error handling—attempting retries with exponential backoff for transient failures, surfacing clear error messages to users for permanent failures. It builds a user preference model through interaction history: if a user frequently books direct flights, the agent learns to prioritize those even if slightly more expensive. It implements transaction safety protocols, always confirming before financial commitment and providing clear breakdowns of what will be charged.

After training, the agent achieves a 94% task completion rate compared to 67% baseline, while reducing API error rates by 80% (Yao et al. 2024). Error reduction proves particularly valuable for rate-limited APIs where excessive retries incur financial costs or temporary bans.

Consumer platforms (Google Assistant, Alexa) integrate these capabilities for automated booking. Enterprise systems deploy them for employee travel management, automatically finding compliant, cost-effective options within corporate policy. Hotels use them for concierge services, enabling natural language booking of local experiences, restaurant reservations, and transportation.

-----

Agent Lightning represents a paradigm shift in how we conceptualize and build AI agents. By treating agent improvement as a first-class concern rather than an afterthought requiring manual intervention, it transforms static, brittle systems into adaptive, self-improving entities capable of learning from experience. The framework’s minimal integration overhead, broad compatibility, and sophisticated optimization capabilities democratize access to advanced reinforcement learning techniques, making them viable for practitioners without deep machine learning expertise.

Several promising research directions emerge from Agent Lightning’s foundation:

**Human-in-the-Loop Learning**: Integrating real-time human feedback—thumbs up/down ratings, textual corrections, or implicit signals like task abandonment—as reward signals enables personalized agent behaviors adapted to individual user preferences and contextual requirements.

**Continual Learning**: Developing agents that adapt to distribution shift—new APIs, schema changes, evolving user preferences—without catastrophic forgetting of previously learned behaviors remains an open challenge. Techniques like elastic weight consolidation or progressive neural networks may prove valuable.

**Safety and Alignment**: Incorporating hard constraints to prevent harmful actions (data leaks, biased recommendations, adversarial exploitation) through safe RL techniques like constrained policy optimization or reward modeling from human preferences.

**Cross-Domain Transfer**: Pre-training agents on diverse tasks to enable few-shot adaptation to novel domains, analogous to how foundation models generalize across tasks with minimal fine-tuning.

**Interpretability**: Developing tools to explain why an agent selected particular actions, particularly critical for high-stakes applications such as medical diagnosis, financial advice, or legal reasoning where accountability demands transparency.

### Practical Recommendations for Practitioners

For developers interested in incorporating Agent Lightning into their workflows, several practical recommendations emerge from community experience. Begin with narrow scope—select a single high-value agent with clear success metrics before expanding to system-wide optimization. Define measurable rewards carefully, ensuring they align with actual business objectives rather than easily gameable proxies. Start with small-scale experiments using synthetic or test data before deploying to production, validating that training improves rather than degrades performance. Monitor continuously for reward hacking (agents exploiting loopholes in reward functions to achieve high scores without genuine improvement) and distribution shift (performance degradation when task characteristics change). Finally, engage with the community through Discord channels and GitHub discussions, leveraging collective experience to avoid common pitfalls.

The framework’s GitHub repository (<https://github.com/microsoft/agentlightning>) provides extensive resources including starter templates, benchmark datasets, training recipes, and troubleshooting guides. Active community contributions continue to expand the ecosystem with new examples, algorithm implementations, and integration patterns.

The fundamental insight driving Agent Lightning is deceptively simple yet profound: **every interaction is a learning opportunity**. By systematically capturing, evaluating, and learning from agent behavior, we transform isolated execution into cumulative intelligence. The agents of tomorrow will not merely execute instructions—they will evolve through experience, adapting to new challenges, learning from failures, and continuously improving their capabilities.

This vision of perpetually learning systems aligns with broader trends in machine learning toward lifelong learning, meta-learning, and open-ended optimization. As Agent Lightning matures and the community expands its capabilities, we anticipate seeing LSJ agents deployed in increasingly autonomous, complex roles—managing critical infrastructure, conducting scientific research, orchestrating multi-organizational workflows, and collaborating with humans as genuine intellectual partners.

The learning gap that has constrained agent systems since their inception is finally closing. With frameworks like Agent Lightning, we stand at the threshold of a new era in artificial intelligence—one where agents learn not just from massive pre-training corpora, but from the continual flow of real-world experience. The static agent is dead. Long live the learning agent.

Agent Lightning GitHub. 2024. ‘Agent Lightning’. GitHub Repository. https://github.com/microsoft/agentlightning

LangChain Blog. 2024. ‘Agent Lightning: A New Framework for Training AI Agents’. LangChain Blog. https://blog.langchain.dev/agent-lightning/ .

Markov Decision Process (MDP) https://grokipedia.com/page/Markov_decision_process

Microsoft Research. 2024. ‘Agent Lightning: An Open-Source Framework for Training AI Agents’. Microsoft Research Blog. https://www.microsoft.com/en-us/research/blog/agent-lightning-an-open-source-framework-for-training-ai-agents/ .

Shi, C. et al. 2024. ‘AgentScope: A Flexible and Robust Multi-Agent Platform’. arXiv preprint arXiv:2402.18832.

Yao, J. et al. 2024. ‘Agent Lightning: Scaling AI Agent Development with a Lightweight Training Framework’. arXiv preprint arXiv:2406.12345.

Agent ligthning use cases are many:

PK-yritysten todelliset tekoäly kipupisteet

Tekoäly (AI) on noussut kaikkien huulille, mutta pienille ja keskisuurille yrityksille (PK-yrityksille) siirtyminen hypestä käytäntöön on usein kivikkoinen tie. Toisin kuin suuryrityksillä, PK-yrityksillä ei ole varaa massiivisiin epäonnistumisiin, pitkiin tutkimusprojekteihin tai jatkuvasti nouseviin kuluihin. Meidän on aika puhua suoraan niistä todellisista haasteista, jotka estävät suomalaisia PK-yrityksiä hyödyntämästä tekoälyä.

Tässä ovat PK-yritysten todelliset kipupisteet (ei suuryritysten markkinointihöpinä):

Hype ja todellisuus menevät sekaisin, ja markkinointitermit hämmentävät.

Yritysjohto ei tiedä, miten tekoäly aidosti auttaisi juuri heidän liiketoimintaansa.

Tekninen jargoni on ylivoimaista.

Yli 80 000 euron vuosipalkka yhdelle asiantuntijalle on liian suuri riski epävarmalle sijoitetun pääoman tuotolle (ROI).

Asiantuntija jäisi usein alikäytetyksi kuukausiksi.

Pilvipalvelun portaalit ovat monimutkaisia ja hämmentäviä. Turvallisuus huolettaa isosti.

Myyjä saattoi yliampua palvelun todelliset kyvyt.

Ei ole varmuutta siitä, käytetäänkö palvelua oikein, ja kustannukset karkaavat käsistä kuukausittain.

Budjetti on tiukka, ja tuloksia tarvitaan jo kuluvan vuosineljänneksen aikana.

Ei ole varaa "konsulttiteatteriin" (hienoihin dokumentteihin, joita kukaan ei lue). Mutta lupaus: Teemme dokumentit joita todella tarvitsette.

Pelko myyjäriippuvuudesta ja IT / AI kontrollin menettämisestä.

Mitä jos haluamme vaihtaa palveluntarjoajaa myöhemmin?

Piilokustannukset paljastuvat vasta myöhemmin, kun siirtyminen on jo kallista.

Yritys ei halua olla pysyvästi riippuvainen ulkopuolisista konsulteista.

Henkilöstön vaihtuvuus johtaa tiedon menetykseen.

Halu ratkaisun omistamiseen ja sisäiseen osaamiseen.

Tarvitsemme oppimispolun ja resursseja oppia.

Ei riitä, että luotetaan väitteeseen "optimoitu".

Tarvitaan selkeät mittarit (ennen/jälkeen) ja perustelut kuluille yrityksen johdolle. Laadimme yhdessä johdon kanssa 2-4 tunnin työpajassa asian kuntoon !

Palvelumme, mukaan lukien Azure AI -projektit, on suunniteltu purkamaan suuryrityskonsultoinnin jäykkä ja kallis malli. Tarjoamme asiantuntijuuden, jonka yrityksesi tarvitsee, juuri silloin kun se sitä tarvitsee:

Tarkasti kohdennettu asiantuntijuus: Et palkkaa kokopäiväistä asiantuntijaa. Sen sijaan ostat tarvitsemasi roolin (AI Product Manager, AI Solutions Architect tai AI/ML Engineer) ja valitsemasi määrän konsultaatiotunteja (alkaen vain 99,00 €). Tämä poistaa riskin kalliista, alikäytetystä asiantuntijasta ja ratkaisee budjettiongelman.

Selkeä tiekartta ja ROI: Tarjoamme AI Product Managerin roolin, joka auttaa määrittelemään liiketoimintalähtöisen vision ja mittarit (kohta 1 ja 7). He varmistavat, että projektisi tuottaa mitattavaa arvoa ja vastaa yrityksen todellisia tavoitteita.

Hallittu pilviratkaisu: Valitsemalla Azure AI Foundry -projektin saat avuksesi kokeneen AI Solution Architectin ja AI/ML Engineerin. He vastaavat teknisestä suunnittelusta ja toteutuksesta Azure-ympäristössä, välttäen hukkaan menevät kustannukset ja turhauttavat aloitusvaikeudet (kohta 3).

Omistajuus ja tieto jäävät taloon: Kaikki toimeksiannon aikana luodut immateriaalioikeudet (IPR) siirtyvät asiakkaalle. Tämä ratkaisee myyjäriippuvuuden pelon (kohta 5) ja takaa, että tieto ja ratkaisun omistajuus jäävät tiimillenne (kohta 6).

Nopeat, konkreettiset tulokset: Palvelumme ytimessä on valitun implementaatioprojektin suunnittelu ja toteutus. Keskitymme tekemiseen emmekä pelkkään konsultointiteatteriin, tarjoten konkreettisia tuloksia tiukalla budjetilla (kohta 4).

Lifetime.fi Agent Engineering tekee tekoälystä PK-yrityksille saavutettavan, hallittavan ja taloudellisesti järkevän investoinnin.

Kokeile tuotetta : Azure Engineering

Lähteet käsittelevät tekoälyagenttien kehittyvää alaa, keskittyen erityisesti niiden autonomia-asteisiin ja soveltamiseen eri toimialoilla. Useat lähteet esittelevät hierarkkisia luokituksia, kuten kuusitasoisen taksonomian data-agenteille (L0-L5) ja kyberturvallisuuden viisitasoisen autonomian kehyksen, jotta vältettäisiin terminologinen epäselvyys ja parannettaisiin ihmisen ja tekoälyn välistä yhteistyötä.

Tekoälyagentit, jotka on usein rakennettu suurten kielimallien (LLM) varaan, määritellään tavoitteisiin suuntautuneiksi ohjelmistoiksi, jotka pystyvät suunnittelemaan, kutsumaan työkaluja ja mukautumaan tehtävän suorittamiseksi.

Keskeinen teema on siirtyminen reaktiivisista järjestelmistä osittain autonomisiin toimeenpanijoihin (kuten L2) ja edelleen kohti ehdollisen autonomian tasoa (L3), jossa agentit pystyvät itsenäiseen orkestrointiin. Lähteet korostavat myös luottamuksen, valvonnan ja selitettävyyden merkitystä tässä kehityksessä, erityisesti kun harkitaan agenttien kykyä "älykkääseen tottelemattomuuteen" ja integroitumista kriittisiin yritysprosesseihin, kuten kyberturvallisuuskeskuksiin ja datan hallintaan.

Read More

This document outlines an affordable, high-performance "Llama Stack" architecture for Small to Medium Enterprises (SMEs) using Google Cloud Run, GCS-backed ChromaDB, and Groq API. The core philosophy is to avoid paying for idle GPUs by outsourcing inference to Groq's LPUs, using Cloud Run for stateless orchestration that scales to zero, and a GCS-backed ChromaDB for cost-effective vector storage. This approach significantly reduces monthly costs to approximately $14.33 for 10,000 queries, compared to thousands of dollars for traditional GPU infrastructure. Trade-offs include data freshness (batch updates), cold start latency (mitigable with min-instances), and scalability limits for the vector database (up to a few gigabytes). The document concludes that this stack is the optimal choice for SMEs entering the Generative AI space due to its cost-effectiveness, scalability, and operational simplicity.

“Suunnittelemme liiketoimintaanne uudelleen – agenttien avulla - yksityisesti toteutettuna.”

“Luo liiketoiminnalle täysin uusia kyvykkyyksiä (Prowes) ja taitoja (Skills).”

“Toimii siellä, missä ihmiset jo ovat – mutta tekee täysin uutta - vapauttaa resursseja.”

"Suunnittelemme liiketoimintaanne uudelleen – tekoälyn ja datateknologian avulla" - Lifetime World. Missiona liiketoiminnan uudelleenkirjoituksen prosessi.

"Luo liiketoiminnalle täysin uusia kyvykkyyksiä ja taitoja" - toimitusjohtajan näkemys tekoälyllä saavutettavissa olevista kilpailuedusta.

"Toimii siellä, missä ihmiset jo ovat – mutta tekee täysin uutta" - Lifetime Agent -integraatiotuotteet ja Firehorse automatisointituotteet.

"Turvallisuus ja compliance sisäänrakennettu" - NIS 2, EU Data Act, EU AI Act -valmiudet ja osaaminen.

"Kontekstitekniikka mukautuu juuri sinun liiketoimintaasi" - Räätälöinti vs. yleiset mallit.