(LSJ) Our Cloud Consultancy now with Kubernetes Engineering

Executive Summary

According to industry analyst firm Gartner, “By 2025, more than 90% of global organizations will be running containerized applications in production.”

Application Container Market to Reach USD 8.2 Billion by 2025

“The promise of container technologies to increase developer speed, efficiency and portability across hybrid infrastructures, as well as microservices, are all driving growth,” said Jay Lyman, 451 Research Principal Analyst.

“Broader and deeper vendor participation along with increasing enterprise use indicate this market will continue to grow and as that growth continues, consolidation in the market is likely.”

What engineering roles does Lifetime Group offer?

Lifetime Group consulting offers cloud native development and engineering based on containerization.

Roles and Skills Available from Lifetime Group

Product Owner

The Product Owner is the container engineering team lead. They lead product development, create the product roadmap, steer containerization, and manage operations through CI/CD pipeline management across the product life cycle.

Project Manager

A Project Manager skilled in agile methodology suits many enterprise customer cases, working on-site or remotely. Add the Cloud Ready Teams of your choice.

Cloud Native / Kubernetes Engineer

Lifetime Containerization engineering.

Cloud Developer / GitHub Actions

Sidecar technologies such as Dapr make it easier to manage multicloud, multi-language environments.

Cloud Security Engineer

Simplify complex and distributed environments across on-premises, edge, and multicloud.

Azure Arc enabled Kubernetes can enable an end-to-end GitOps flow on clusters deployed outside of Azure to allow infrastructure and application consistency and governance across multi-cloud and on-premises environments.

Deploy and manage multiple Kubernetes configurations using GitOps on an Azure Arc-enabled Kubernetes cluster.

Site Reliability Engineer (SRE)

How do we create value?

Lifetime Group offers solutions to enterprise customers who want to keep their competitive advantage and use modern cloud computing at an optimal price point. We help customers improve OPEX by migrating from older CAPEX-based solutions to new ones.

Serverless technologies combined with containerization offer next-level capabilities for reducing the cost of running workloads in the cloud.

The release of Knative 1.0 marks an important milestone, made possible by the contributions and collaboration of over 600 developers. Google released the Knative project in July 2018, and it was developed in close partnership with VMware, IBM, Red Hat, and SAP. Over the last 3 years, Knative has become the most widely installed serverless layer on Kubernetes.


KEY TAKEAWAYS:

The use of containers in production has increased to 92%.

Kubernetes use in production has increased to 83%.

There has been a 50% increase in the use of all CNCF projects.

Usage of cloud native tools:

• 82% of respondents use CI/CD pipelines in production.

• > 50% of respondents use serverless technologies in production.

• > 50% of respondents use a service mesh in production.

• 55% of respondents use stateful applications in containers in production.

Container management platforms are expected to be adopted at large scale in 2022.

Amazon Elastic Container Service (Amazon ECS)

AWS Fargate is a serverless, pay-as-you-go compute engine that lets you focus on building applications without managing servers, and improves security through application isolation by design.

Elastic Container Service is used extensively within Amazon to power services such as Amazon SageMaker, AWS Batch, Amazon Lex, and Amazon.com’s recommendation engine, ensuring ECS is tested extensively for security, reliability, and availability.

Container Security

The Container Security market players 2021 include

Data Management with Kubernetes

Kubernetes allows storage volumes to be mounted into a pod and managed directly within Kubernetes. There are many ways to manage state and complex, data-intensive workloads in Kubernetes.

Database replicas are not interchangeable like stateless containers; each replica has its own unique state.

Databases require tight coordination with the other nodes running the same application to keep data versions consistent.
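That coordination can be made concrete with majority quorums, which consensus protocols such as Raft (used by CockroachDB) rely on: a write commits only once a majority of replicas acknowledges it. The sketch below shows only that arithmetic; the function names are illustrative and do not come from any database's API.

```python
def quorum_size(replicas: int) -> int:
    """Smallest majority of a replica set: floor(n / 2) + 1."""
    if replicas < 1:
        raise ValueError("a replica set needs at least one member")
    return replicas // 2 + 1


def tolerated_failures(replicas: int) -> int:
    """How many replicas can fail while a majority can still be formed."""
    return replicas - quorum_size(replicas)


if __name__ == "__main__":
    for n in (1, 3, 4, 5):
        print(f"{n} replicas: quorum {quorum_size(n)}, survives {tolerated_failures(n)} failure(s)")
```

An even replica count adds a member without raising fault tolerance (4 replicas still survive only one failure), which is why odd counts such as 3 or 5 are common.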

A cloud native, globally-distributed SQL database.

MySQL and PostgreSQL-compatible relational database built for the cloud.

Azure Cosmos DB serverless lets you use your Azure Cosmos account in a consumption-based fashion where you are only charged for the Request Units consumed by your database operations and the storage consumed by your data. Serverless containers can serve thousands of requests per second with no minimum charge and no capacity planning required.
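The consumption-based billing described above is simple arithmetic: Request Units consumed plus storage used, with no minimum charge. A minimal sketch, assuming RUs are priced per million and storage per GB-month; the prices are parameters on purpose, and the numbers in the example are placeholders, not Azure's actual rates.

```python
def serverless_monthly_cost(request_units: float, storage_gb: float,
                            price_per_million_ru: float, price_per_gb_month: float) -> float:
    """Estimate a monthly bill under a consumption model (assumed pricing units)."""
    if min(request_units, storage_gb, price_per_million_ru, price_per_gb_month) < 0:
        raise ValueError("inputs must be non-negative")
    ru_cost = request_units / 1_000_000 * price_per_million_ru  # pay only for RUs consumed
    storage_cost = storage_gb * price_per_gb_month              # plus the data actually stored
    return round(ru_cost + storage_cost, 2)


if __name__ == "__main__":
    # Placeholder prices for illustration only; check the current Azure pricing page.
    print(serverless_monthly_cost(50_000_000, 10, price_per_million_ru=0.25, price_per_gb_month=0.25))
```

With zero requests the RU term drops to zero, which is what "no minimum charge" means in practice; only stored data is billed.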

A fully managed, distributed database optimized for heavy workloads and fast-growing web and mobile apps, IBM Cloudant is available as an IBM Cloud® service with a 99.99% SLA. Cloudant elastically scales throughput and storage, and its API and replication protocols are compatible with Apache CouchDB for hybrid or multicloud architectures.

Dapr provides microservice building blocks for cloud and edge.

Enterprise applications running on Kubernetes have non-negotiable business requirements like high availability, IAM, data security, backup and disaster recovery, strict performance SLAs and hybrid/multi-cloud operations.

Container Monitoring

Container monitoring is the activity of continuously collecting metrics and tracking the health of containerized applications and microservices environments, in order to improve their health and performance and ensure they are operating smoothly.

CA Technologies (US),

AppDynamics (US),

Splunk (US),

Dynatrace (US),

Datadog (US),

BMC Software (US),

Sysdig (US),

SignalFx (US),

Wavefront (US),

CoScale (Belgium)

Container Management 2022

The four leading vendors of Kubernetes Management platforms

SUSE RANCHER 2.6

RED HAT OPENSHIFT

Google Anthos 1.x

Enterprise-grade container orchestration and management service.

Automate policy and security at scale.

Fully managed service mesh with built-in visibility.

Modernizing your security for hybrid and multi-cloud deployments.

Kubernetes, also known as K8s, is an open-source system for automating deployment, scaling, and management of containerized applications.

Kubernetes business case

Containers make it easier to manage evolving complexity and keep apps running throughout their lifecycle.

With Kubernetes:

organisations can reach higher delivery performance

scale apps and their versions/instances up and down

agile application creation and deployment: container images are easier and faster to create, and applications faster to deliver, than with virtual machine images and traditional deployment.

roll out new versions of apps, with Cloud Ready Teams delivering continuous development, continuous integration, and continuous deployment.

build and deploy container images reliably and frequently, with quick, easy, and secure rollback (thanks to image immutability).

move Apps to another location if a machine fails.

share resources fairly among different apps, including non-Kubernetes workloads.

create abstractions built on open source community tooling.
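The list above mentions scaling apps up and down. Kubernetes' Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), skipping changes while the ratio is within a tolerance (0.1 by default). The sketch reproduces only that rule; the real controller also applies stabilization windows and readiness handling, and the min/max defaults here are illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     tolerance: float = 0.1, min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Replica count suggested by the HPA scaling ratio (simplified)."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    # Inside the tolerance band the autoscaler leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the bounds that minReplicas / maxReplicas would set on an HPA object.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(3, current_metric=90, target_metric=60))  # 5: CPU 90% vs 60% target
    print(desired_replicas(3, current_metric=30, target_metric=60))  # 2: scale down
```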

Multicloud technology stack (IDC 2019)

NextGen Security and Observability for Kubernetes

Secure the container clusters.

Control access to the resources.

Plan, build and provide a secure software development process.

Build a secure DevOps culture with a secure sourcing partner who can manage CI/CD for your container images and related services.

The Open Source Lithops Framework for serverless

How can serverless computing be easily used for a broad range of scenarios, such as high-performance computing, Monte Carlo simulations, big data pre-processing, and molecular biology?

How can existing code and frameworks be connected to serverless?

The open source Lithops framework (https://lithops-cloud.github.io/) introduces serverless with minimal effort: fusing existing code with serverless computing brings automated scalability while keeping existing frameworks in use.

Lithops is a novel toolkit that enables the transparent execution of unmodified, regular Python code against disaggregated cloud resources. Lithops supports hybrid execution environments using Kubernetes and Apache OpenWhisk, plus other serverless computing offerings.

Aimed at knowledgeable Python developers, Lithops keeps interfaces simple and consistent and provides transparent access to disaggregated storage and memory resources in the cloud. Further, its cloud-agnostic architecture ensures portability and helps overcome vendor lock-in. Altogether, this represents a significant step forward in the programmability of the cloud.
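Lithops centres on a FunctionExecutor with a map-style call that fans one plain Python function out over a list of inputs. The sketch below does not use Lithops: it applies the same fan-out/fan-in shape to a Monte Carlo estimate of pi, using the standard library's ThreadPoolExecutor as a local stand-in so it runs without cloud credentials. Threads are GIL-bound, so this shows the programming model, not a speed-up.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from typing import Tuple


def count_hits(task: Tuple[int, int]) -> int:
    """One independent task: sample points in the unit square, count those in the quarter circle."""
    seed, samples = task
    rng = random.Random(seed)  # per-task seed keeps every run reproducible
    hits = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits


def estimate_pi(tasks: int = 8, samples_per_task: int = 50_000) -> float:
    work = [(seed, samples_per_task) for seed in range(tasks)]
    # Fan out one call per task, then fan in by summing the partial results.
    with ThreadPoolExecutor() as executor:
        total_hits = sum(executor.map(count_hits, work))
    return 4.0 * total_hits / (tasks * samples_per_task)


if __name__ == "__main__":
    print(f"pi is roughly {estimate_pi():.3f}")
```

Because count_hits is an ordinary function over ordinary inputs, it is the kind of unmodified code a serverless map could distribute; see the Lithops documentation for how FunctionExecutor.map passes each input and how get_result collects the outputs.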


Are you a cloud native or a cloud migrant?

Enterprises have adopted the “cloud-native” agile mindset, reorganizing people, processes, and workflows and creating applications based on microservices, containers, Knative, and serverless.

Containers in serverless application architecture.

Enterprise Architecture built on Containers and Sidecars

Q: What are the key challenges in managing multicloud, microservices-based containerized applications?

A: Adopting microservices-based apps on K8s increases the number of disparate clusters that require consistent, secure management.

A cluster-by-cluster approach becomes insufficient and error-prone, so provisioning, managing, and operating K8s cluster environments must be automated while keeping full-stack observability.

How do you plan, create, and manage multicloud applications?

What are the cloud optimization technologies?

Fleet of containers: how do you manage thousands of containers efficiently?

How do you secure containers and plan for outages?

How do you gain comprehensive visibility and observability?

Cloud Native CI/CD Tools for Serverless Container Application Development

Foundations You Need to Know

Ecosystem

1. Apache Software Foundation

2. Cloud Native Computing Foundation

3. CNCF Projects: Kubernetes – Prometheus – fluentd – containerd

4. The Cloud Native Landscape

Kubernetes learning

1. Begin with the Kubernetes Basics

2. Find out about the Kubernetes Benefits

3. Terminology: Pod, Service, Deployment, Cluster

4. Join communities and participate in events.

Amazon ECR

Continuous Integration, Delivery, and Deployment pipeline from Amazon Web Services.

AWS CodePipeline

Where to find information?

Learn more about Serverless at Serverless Land.

What happens, and what needs to be done, when a Lambda function has grown into production within 100 days?

Best Practices for growing a Serverless application by ⛷️ Ben Smith.


Learn Serverless from Google Opensource.

Install and learn Docker Desktop and Ubuntu Linux on your desktop

Install Git and GitHub Desktop and begin building your repository knowledge.

Set up local K8s (kubernetes/minikube) and all the tooling in Visual Studio Code, along with the cloud CLIs.

Set up local OpenShift with Minishift

MicroK8s: https://microk8s.io/

Lightweight Kubernetes: the k3s distribution, as used by civo.com

kind (Kubernetes in Docker): https://kind.sigs.k8s.io/

Learn more with Katacoda

- Scenarios and a playground cluster with a master and one node to try out.

Amazon Elastic Container Registry for Images.

Learn managed Kubernetes: AKS, EKS, GKE

Open Source Project: Lithops Lightweight Optimized Serverless Processing.

MQTT: The Standard for IoT Messaging

MQTT is an OASIS standard messaging protocol for the Internet of Things (IoT). It is designed as an extremely lightweight publish/subscribe messaging transport that is ideal for connecting remote devices with a small code footprint and minimal network bandwidth. MQTT today is used in a wide variety of industries, such as automotive, manufacturing, and telecommunications.
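In MQTT's publish/subscribe model, subscribers register topic filters rather than exact topics: "+" matches exactly one topic level and a trailing "#" matches the parent level and everything below it, while topics starting with "$" are not matched by filters that begin with a wildcard. A minimal matcher sketch, assuming the filter is already valid; brokers and client libraries ship their own implementations.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic filter matches a concrete topic name."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    # System topics such as "$SYS/..." are not matched by a leading wildcard.
    if topic.startswith("$") and filter_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(filter_levels):
        if level == "#":
            return True  # multi-level wildcard: matches this level and all below
        if i >= len(topic_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != topic_levels[i]:
            return False  # literal level differs ("+" accepts any single level)
    return len(filter_levels) == len(topic_levels)


if __name__ == "__main__":
    print(topic_matches("factory/+/temperature", "factory/line1/temperature"))  # True
    print(topic_matches("factory/#", "factory"))                                # True
```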

Try out VMware Tanzu Community Edition.

VMware Tanzu Community Edition (GitHub) is a full-featured, easy to manage Kubernetes platform for learners and users on your local workstation or your favorite cloud. Tanzu Community Edition enables the creation of application platforms: infrastructure, tooling, and services providing location to run applications and enable positive developer experiences.

The first cloud native service provider powered only by Kubernetes; civo.com

vmWARE Tanzu Community Edition

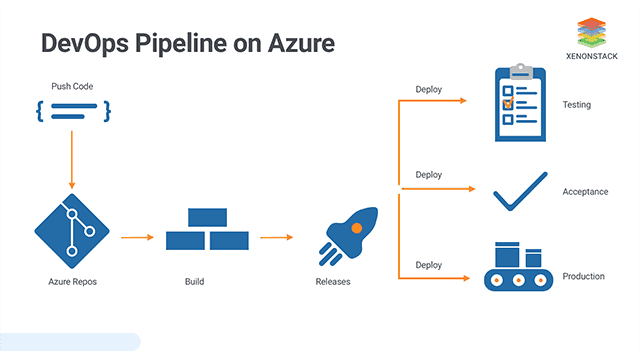

CI / CD secure pipeline management

GitLab Ultimate for IBM Cloud Paks

Microsoft DevOps

Atlassian ITSM for JIRA

The Kubernetes network model specifies:

Every pod gets its own IP address.

Containers within a pod share the pod IP address and can communicate freely with each other.

Pods can communicate with all other pods in the cluster using pod IP addresses (without NAT).

Isolation (restricting what each pod can communicate with) is defined using network policies.

As a result, pods can be treated much like VMs or hosts (they all have unique IP addresses), and the containers within pods very much like processes running within a VM or host (they run in the same network namespace and share an IP address).
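Network policies are ordinary Kubernetes objects of kind NetworkPolicy (API group networking.k8s.io/v1). The sketch builds one as a Python dict that lets only pods labelled like an API tier reach database pods on a single TCP port; once a pod is selected by a policy with an Ingress type, all other inbound traffic to it is denied. The namespace, labels, and port are hypothetical, and enforcement needs a network plugin that implements NetworkPolicy, such as Calico.

```python
from typing import Any, Dict


def allow_ingress_from(policy_name: str, namespace: str, target_labels: Dict[str, str],
                       source_labels: Dict[str, str], port: int,
                       protocol: str = "TCP") -> Dict[str, Any]:
    """NetworkPolicy manifest: only pods matching source_labels may reach target pods on port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": policy_name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": target_labels},  # pods this policy isolates
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": source_labels}}],  # allowed peers
                "ports": [{"protocol": protocol, "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    import json
    # Hypothetical example: only app=api pods may reach app=db pods on PostgreSQL's port.
    print(json.dumps(allow_ingress_from("db-allow-api", "shop", {"app": "db"}, {"app": "api"}, 5432), indent=2))
```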

What are Kubernetes Services?

Kubernetes Services provide a way of abstracting access to a group of pods as a network service. The group of pods is usually defined using a label selector. Within the cluster, the network service is usually represented as a virtual IP address, and kube-proxy load balances connections to the virtual IP across the group of pods backing the service. The virtual IP is discoverable through Kubernetes DNS. The DNS name and virtual IP address remain constant for the lifetime of the service, even though the pods backing the service may be created or destroyed, and the number of pods backing the service may change over time.
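The Service mechanics above (label selector, stable DNS name, load balancing across backing pods) can be modelled in a few lines. This is a toy model, not kube-proxy: the real choice of backend depends on the proxy mode (iptables mode picks a backend at random, IPVS mode defaults to round robin), and "cluster.local" is only the common default cluster domain. Pod names and IPs are hypothetical.

```python
from itertools import cycle
from typing import Any, Dict, Iterator, List

Pod = Dict[str, Any]  # {"name": ..., "labels": {...}, "ip": ...}


def select_pods(pods: List[Pod], selector: Dict[str, str]) -> List[Pod]:
    """Equality-based label selection: a pod matches if it carries every key=value pair."""
    return [p for p in pods if all(p["labels"].get(k) == v for k, v in selector.items())]


def service_dns_name(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """The Service's stable in-cluster DNS name."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def round_robin(endpoints: List[str]) -> Iterator[str]:
    """Toy balancer: hand out backend pod IPs in turn."""
    return cycle(endpoints)


if __name__ == "__main__":
    pods = [
        {"name": "web-1", "labels": {"app": "web"}, "ip": "10.1.0.4"},
        {"name": "web-2", "labels": {"app": "web"}, "ip": "10.1.0.5"},
        {"name": "db-0", "labels": {"app": "db"}, "ip": "10.1.0.9"},
    ]
    backends = select_pods(pods, {"app": "web"})
    balancer = round_robin([p["ip"] for p in backends])
    print(service_dns_name("web", "default"))  # web.default.svc.cluster.local
    print([next(balancer) for _ in range(3)])  # ['10.1.0.4', '10.1.0.5', '10.1.0.4']
```

When pods are replaced, only the selected list changes; the DNS name the clients use stays the same, which is the point of the abstraction.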

Kubernetes Services can also define how a service is accessed from outside the cluster, for example using

a node port, where the service can be accessed via a specific port on every node,

or a load balancer, where a network load balancer provides a virtual IP address through which the service can be accessed from outside the cluster.
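External access is chosen with the Service's spec.type field (ClusterIP, NodePort, or LoadBalancer). The sketch emits a Service manifest as a Python dict, which kubectl accepts once serialised to JSON; the check against 30000-32767 assumes the default node-port range, which a cluster administrator can change. Service name, labels, and ports are hypothetical.

```python
import json
from typing import Any, Dict, Optional

NODE_PORT_RANGE = range(30000, 32768)  # default node-port range, 30000-32767 inclusive


def service_manifest(name: str, selector: Dict[str, str], port: int, target_port: int,
                     service_type: str = "ClusterIP", node_port: Optional[int] = None) -> Dict[str, Any]:
    """Build a v1 Service that exposes pods matching selector."""
    if service_type not in ("ClusterIP", "NodePort", "LoadBalancer"):
        raise ValueError(f"unsupported service type {service_type!r}")
    port_spec: Dict[str, Any] = {"protocol": "TCP", "port": port, "targetPort": target_port}
    if node_port is not None:
        if service_type == "ClusterIP":
            raise ValueError("nodePort applies only to NodePort or LoadBalancer services")
        if node_port not in NODE_PORT_RANGE:
            raise ValueError("nodePort outside the default 30000-32767 range")
        port_spec["nodePort"] = node_port  # the same port is opened on every node
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"type": service_type, "selector": selector, "ports": [port_spec]},
    }


if __name__ == "__main__":
    print(json.dumps(service_manifest("web", {"app": "web"}, 80, 8080, "NodePort", 30080), indent=2))
```

Saved to a file, the printed JSON could be applied with `kubectl apply -f web-service.json`; leaving node_port unset lets Kubernetes allocate one.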

Creating a DevOps culture in the organisation

Kubernetes container runtimes

TIP: You need to install a container runtime into each node in the cluster so that Pods can run there.

Containerd - An industry-standard container runtime with an emphasis on simplicity, robustness and portability.

CRI-O - A lightweight container runtime for Kubernetes.

Docker engine

See the Kubernetes container runtimes documentation for more information.

Lifetime Studios delivers CI/CD pipeline management as a service with IBM Cloud Paks. (Picture: Lifetime Studios on GitLab Ultimate.)

Using container image support for AWS Lambda with AWS SAM

by Eric Johnson

CDF Continuous Delivery Projects

Tekton Cloud Native CI/CD

Jenkins X is an open source CI/CD solution for modern cloud applications on Kubernetes. Jenkins X provides pipeline automation, built-in GitOps and preview environments to help teams collaborate and accelerate their software delivery at any scale.

Spinnaker is an open source, multi-cloud continuous delivery platform for releasing software changes with high velocity and confidence. Created at Netflix, it has been battle-tested in production by hundreds of teams over millions of deployments. It combines a powerful and flexible pipeline management system with integrations to the major cloud providers.

supports multi-cloud

Automated Releases using deployment pipelines that run integration and system tests, spin up and down server groups, and monitor your rollouts. Trigger pipelines via git events, Jenkins, Travis CI, Docker, CRON, or other Spinnaker pipelines.

Built-in Deployment Best Practices.

Tekton is a set of shared, open source components for building CI/CD systems. It modernizes the Continuous Delivery control plane and moves the brains of software deployment to Kubernetes. Tekton’s goal is to provide industry specifications for CI/CD pipelines, workflows and other building blocks through a vendor neutral, open source foundation.

Screwdriver treats Continuous Delivery as a first-class citizen in your build pipeline. Easily define the path that your code takes from Pull Request to Production.

Screwdriver’s architecture uses pluggable components under the hood to allow you to swap out the pieces that make sense for your infrastructure. Swap in Postgres for the Datastore or Jenkins for the Executor.

You can even dynamically select an execution engine based on the needs of each pipeline. For example, send golang builds to the kubernetes executor while your iOS builds go to a Jenkins execution farm.

Ortelius is an open source project that aims to simplify the implementation of microservices. By providing a central catalog of services with their deployment specs, application teams can easily consume and deploy services across clusters.

Ortelius tracks application versions based on service updates and maps their service dependencies, eliminating confusion and guesswork. Unique to Ortelius is the ability to track your microservice inventory across clusters, mapping the differences. Ortelius serves Site Reliability Engineers and Cloud Architects in their migration to, and ongoing management of, a microservice implementation.
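"Mapping the differences" between clusters boils down to comparing service inventories. The sketch below is not Ortelius's data model or API; it is a hypothetical illustration that diffs two {service: version} maps, one per cluster, and reports what is missing on either side and where versions drift.

```python
from typing import Dict, List, Tuple, TypedDict


class InventoryDiff(TypedDict):
    only_in_a: List[str]
    only_in_b: List[str]
    version_mismatch: Dict[str, Tuple[str, str]]


def diff_inventories(cluster_a: Dict[str, str], cluster_b: Dict[str, str]) -> InventoryDiff:
    """Compare two {service: deployed version} maps (hypothetical inventory format)."""
    shared = sorted(set(cluster_a) & set(cluster_b))
    return {
        "only_in_a": sorted(set(cluster_a) - set(cluster_b)),
        "only_in_b": sorted(set(cluster_b) - set(cluster_a)),
        # Services deployed in both clusters but at different versions.
        "version_mismatch": {s: (cluster_a[s], cluster_b[s]) for s in shared if cluster_a[s] != cluster_b[s]},
    }


if __name__ == "__main__":
    staging = {"orders": "1.5.0", "cart": "2.1.0", "search": "0.1.0"}
    production = {"orders": "1.4.0", "cart": "2.1.0", "auth": "0.9.2"}
    print(diff_inventories(production, staging))
```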

Jenkins is the leading open source automation server supported by a large community of developers, testers, designers and other people interested in continuous integration, continuous delivery and modern software delivery practices.

Built on the Java Virtual Machine (JVM), it provides more than 1,500 plugins that extend Jenkins to automate with practically any technology software delivery teams use.

Quarkus: a Kubernetes-native Java stack tailored for OpenJDK HotSpot and GraalVM, crafted from best-of-breed Java libraries and standards.

CNCF serves as the vendor-neutral home for many of the fastest-growing open source projects, including Kubernetes, Prometheus, and Envoy.

Examining a DevSecOps-ready solution

VMware Tanzu Advanced

Simplify and secure the container lifecycle at scale - and speed app delivery.

4 API development challenges that teams are facing

API Standardization and Governance through API lifecycle

Enabling and testing Collaboration Across API Workflows

Predicting Security Challenges and securing Performance & Quality Assurance

Avoiding API Security Challenges

Build on Microsoft Windows 10 and deploy Kubernetes with MicroK8s.

Are you looking for a Kubernetes solution to run on your Windows machine? MicroK8s is a lightweight, CNCF-certified distribution of Kubernetes for Linux, Windows and macOS. The single-package installer includes all Kubernetes services, along with a collection of carefully selected add-ons.

MicroK8s is especially appropriate for use cases where resources are limited, such as a Microsoft Windows 10 workstation with an Ubuntu terminal, an IoT device, or an edge device.

MicroK8s provides the same security support, workload portability and lifecycle automation as Charmed Kubernetes, while further simplifying the installation and configuration process to get users up-and-running as quickly as possible.

Monitoring

Monitor and automatically discover service instances with Prometheus, the leading open-source monitoring solution

(GitHub: "The Prometheus monitoring system and time series database").
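Prometheus collects metrics by scraping targets over HTTP (by default from a /metrics path) and reading a plain-text exposition format: HELP and TYPE comment lines followed by one sample per line. A minimal sketch that renders one metric family in that format; client libraries such as prometheus_client do this for you, and this sketch escapes label values but not HELP text.

```python
from typing import Dict, List, Tuple

Sample = Tuple[Dict[str, str], float]  # (labels, value)


def _escape_label_value(value: str) -> str:
    # Backslash first, so the escapes added for quotes and newlines are not doubled.
    return value.replace("\\", "\\\\").replace("\n", "\\n").replace('"', '\\"')


def render_metric(name: str, help_text: str, metric_type: str, samples: List[Sample]) -> str:
    """Render one metric family in the Prometheus text exposition format."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} {metric_type}"]
    for labels, value in samples:
        if labels:
            label_str = ",".join(f'{k}="{_escape_label_value(v)}"' for k, v in sorted(labels.items()))
            lines.append(f"{name}{{{label_str}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_metric("http_requests_total", "Total HTTP requests.", "counter",
                        [({"method": "get", "code": "200"}, 1027)]), end="")
```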

Service Mesh Management

A service mesh is a dedicated infrastructure layer for handling service-to-service communication in a microservices environment.

There are a couple of good choices for setting up and managing a service mesh. One is Istio (istio.io; GitHub: "Connect, secure, control, and observe services"). Reading: The Service Mesh: What Every Software Engineer Needs to Know about the World's Most Over-Hyped Technology.

A standard interface for service meshes on Kubernetes

Service Mesh Interface (SMI) is a widely adopted open specification that defines a set of APIs for the most common service mesh use cases (traffic policy, telemetry, and traffic shifting) and enables interoperability between service meshes.
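SMI's traffic-shifting use case is expressed as a TrafficSplit that lists backend services with weights, and the mesh routes each request in proportion to those weights (for example 90/10 during a canary rollout). The sketch models only that proportional routing with a seeded random choice; it is not how any particular mesh implements it, and the service names are hypothetical.

```python
import random
from collections import Counter
from typing import Callable, Dict, Optional


def weighted_router(backends: Dict[str, int], seed: Optional[int] = None) -> Callable[[], str]:
    """Return a function that picks a backend with probability proportional to its weight."""
    names = list(backends)
    weights = [backends[n] for n in names]
    if any(w < 0 for w in weights) or sum(weights) == 0:
        raise ValueError("weights must be non-negative with a positive total")
    rng = random.Random(seed)  # seeded so the demo is reproducible

    def route() -> str:
        return rng.choices(names, weights=weights, k=1)[0]

    return route


if __name__ == "__main__":
    route = weighted_router({"web-v1": 90, "web-v2": 10}, seed=42)
    counts = Counter(route() for _ in range(10_000))
    print(counts)  # roughly 9,000 requests to web-v1 and 1,000 to web-v2
```

Shifting traffic is then just a matter of changing the weights step by step (90/10, 50/50, 0/100) while watching telemetry.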

Project Linkerd

Linkerd is a service mesh for Kubernetes. It makes running services easier and safer by giving you runtime debugging, observability, reliability, and security—all without requiring any changes to your code.

Project Calico

Calico is an open source networking and network security solution for containers, virtual machines, and native host-based workloads.

Project Grafana

The Grafana open source project was started by Torkel Ödegaard in 2014. It allows administrators to query, visualize, and alert on metrics and logs no matter where they are stored.

Grafana has a pluggable data source model and comes bundled with rich support for many of the most popular time series databases, such as Graphite, Prometheus, Elasticsearch, OpenTSDB, and InfluxDB. It also has built-in support for cloud monitoring vendors such as Google Stackdriver, Amazon CloudWatch, and Microsoft Azure, and for SQL databases such as MySQL and Postgres.

Amazon CloudWatch is a monitoring and observability service built for DevOps engineers, developers, site reliability engineers (SREs), and IT managers.

Grafana can combine data from multiple data sources into a single dashboard.

Service Mesh Management

More than half of large corporations are running a service mesh in production.

The most used Service Meshes

Read: 9 open-source service meshes compared


Cloud Native Software Development

Accelerate engineering productivity and simplify operations with end-to-end tooling. Automatically build, test, deploy, and manage your code changes across Google Kubernetes Engine and Cloud Run for a serverless runtime. Lifetime consulting is a Google partner.

Increase observability of code review frequency, tool usage, and code quality satisfaction.

I love logs; log aggregation is easier when we use a container platform.

enable self-managed teams without losing corporate compliance.

have improved cost management.

To deliver reliable 5G services, one way operators can improve application performance and reduce latency is by extending telco cloud infrastructure from their network core to the edge.

Deliver closer to the customers, devices, and data sources. 5G is expected to enable unprecedented levels of data capture, processing and storage at the edge - allowing enterprises to deliver new services such as digital healthcare, smart traffic management, connected and autonomous vehicles, machine sensor processing and pre-emptive maintenance, and real-time analytics.
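On the log aggregation point made earlier in this section: a platform collects one log stream per container, and aggregation interleaves them into a single timeline. A minimal sketch, assuming each stream is already time-ordered and uses ISO-8601 UTC timestamps in one fixed format (so string order equals time order); the container names and messages are hypothetical, and real pipelines use collectors such as Fluentd.

```python
import heapq
from typing import Iterable, Iterator, Tuple

# (timestamp, container, message); fixed-format ISO-8601 UTC strings sort chronologically.
LogRecord = Tuple[str, str, str]


def merge_container_logs(*streams: Iterable[LogRecord]) -> Iterator[LogRecord]:
    """Lazily interleave per-container streams, each already sorted by timestamp."""
    return heapq.merge(*streams, key=lambda record: record[0])


if __name__ == "__main__":
    api = [("2021-11-02T10:00:01Z", "api", "GET /orders"),
           ("2021-11-02T10:00:04Z", "api", "GET /orders/7")]
    db = [("2021-11-02T10:00:02Z", "db", "query took 3 ms"),
          ("2021-11-02T10:00:03Z", "db", "checkpoint complete")]
    for ts, container, message in merge_container_logs(api, db):
        print(ts, container, message)
```

heapq.merge reads the streams lazily, so the same approach works on long-running iterators without loading whole logs into memory.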

What is Kubernetes?

Kubernetes is a system for managing container-based applications. Kubernetes empowers developers to utilize new architectures like microservices and serverless that require developers to think about application operations in a way they may not have before. These software architectures can blur the lines between traditional development and application operations. Fortunately, Kubernetes also automates many of the tedious components of operations, including deployment, operation, and scaling. For developers, Kubernetes opens a world of possibilities in the cloud and solves many problems, paving the way to focus on making software. (Source: Red Hat DevNation.)

Kubernetes, commonly stylized as K8s, is an open-source container-orchestration system for automating application deployment, scaling, and management. (Source: Wikipedia.) In practice it also shapes how applications handle DevOps, security, availability, and data gravity. Kubernetes can run on the major cloud computing platforms and on local Linux machines such as Ubuntu.

What are databases built for Kubernetes?

CockroachDB on Kubernetes

CockroachDB is the only database architected and built from the ground up to deliver on the core distributed principles of atomicity, scale, and survival, so you can manage your database in Kubernetes, not alongside it.

Using StatefulSets, CockroachDB is a natural fit for deployment within a Kubernetes cluster. Simply attach storage however you like, and CockroachDB handles the distribution of data across nodes and survives node failures.
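StatefulSets suit databases because each replica gets a stable identity: pods are named <statefulset>-<ordinal>, and with a headless governing Service each pod gets a stable DNS name of the form <pod>.<service>.<namespace>.svc.<cluster-domain>. That is how database nodes can find each other even after pods are rescheduled. A sketch that lists those names; the StatefulSet, Service, and namespace names are hypothetical, and "cluster.local" is the common default domain.

```python
from typing import List


def statefulset_pod_fqdns(statefulset: str, headless_service: str, namespace: str,
                          replicas: int, cluster_domain: str = "cluster.local") -> List[str]:
    """Stable per-pod DNS names for a StatefulSet governed by a headless Service."""
    return [
        f"{statefulset}-{ordinal}.{headless_service}.{namespace}.svc.{cluster_domain}"
        for ordinal in range(replicas)  # ordinals run from 0 to replicas - 1
    ]


if __name__ == "__main__":
    for fqdn in statefulset_pod_fqdns("cockroachdb", "cockroachdb", "default", 3):
        print(fqdn)  # e.g. cockroachdb-0.cockroachdb.default.svc.cluster.local
```

A database's join or peer list can be built from these names, which stay the same when a pod is replaced.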

Kubernetes networking

Kubernetes has become the de facto standard for managing containerized workloads and services. By facilitating declarative configuration and automation, it massively simplifies the operational complexities of reliably orchestrating compute, storage, and networking. The Kubernetes networking model is a core enabler of that simplification, defining a common set of behaviors that makes communication between microservices easy and portable across any environment.

Sources

Kubernetes is boring, @smarterclayton, Red Hat DevNation Day 2020.

How close are we to 5G edge cloud?, Red Hat Blog.

Wartsila maritime webinar, October 2020.

NIST Special Publication 800-190, Application Container Security Guide.

Kubernetes Networking Guide, Tigera, 2020.


Kubernetes Solution types

Vanilla Kubernetes

Platform-as-a-Service

Public cloud Kubernetes distributions

Managed Kubernetes Solutions

Enterprise Kubernetes Platforms

The dev-focused platforms prioritise ease of use for developers.

The ops-focused platforms generally provide more advanced operational controls, often focused on ensuring the reliability and availability enterprises need.

Top container-specific host OSs

A container-specific host Linux OS is a minimalist OS explicitly designed to only run containers, with all other services and functionality disabled, and with read-only file systems and other hardening practices employed. When using a container-specific host OS, attack surfaces are typically much smaller than they would be with a general-purpose host OS, so there are fewer opportunities to attack and compromise a container-specific host OS. Accordingly, whenever possible, organizations should use container-specific host OSs.

VMware Tanzu Community Edition is a free, open-source container management platform.

Cybersecurity Standards

ISA/IEC 62443 standard

provides a flexible framework to address and mitigate current and future security vulnerabilities in industrial automation and control systems (IACSs). Operational Technology (OT)

ISO/IEC 27001 INFORMATION SECURITY MANAGEMENT

provides requirements for an information security management system (ISMS), though there are more than a dozen standards in the ISO/IEC 27000 family. Following them enables organizations of any kind to manage the security of assets such as financial information, intellectual property, employee information, and any information entrusted by third parties. Information Technology (IT).

The Challenges of Container based software ecosystem

The container ecosystem is immature and lacks operational best practices. Infrastructure and operations leaders are accelerating container deployment in their production environments.

The adoption of containers and Kubernetes is increasing for

1. modernizing legacy applications,

2. newly built cloud-native applications, and

3. 5G, 5.5G, 6G, and edge services, which offer an opportunity for containers that no one can afford to miss.

Containers are a solution for managing complexity, yet they create a challenging operating environment.

As the cloud-native roadmap evolves, these challenges, including the challenge of managing innovation, will be overcome.

While there are commonalities in the types of challenges enterprises encounter with Kubernetes, there’s no true one-size-fits-all way to manage them but rather a number of potential approaches, each with its own advantages and drawbacks.

(Source: Five strategies to accelerate Kubernetes deployment in the enterprise, a Canonical whitepaper.)

What are the benefits of using Kubernetes ecosystem tools and community services?

As Kubernetes adoption spreads from early adopters to enterprises, many CIOs are discovering that while containers and Kubernetes offer advantages around development velocity, agility, and cost management, they also create a new set of challenges to overcome. The right approach to taming Kubernetes will depend on the size, technical sophistication, and business goals of each enterprise and of the teams tackling its application challenges.

Kubernetes engineering challenges include

Security; How to manage and secure containers that run corporate workloads?

observability: surfacing OS-level information and metrics, application health, APIs, and messaging

resiliency

portability

debugging: code reviews, tools used, and code quality assurance

alerts, messaging, and ops

reliability

effortless use

low-code

data gravity

data privacy

data consistency

compliance with common security benchmarks

GDPR compliance in the EU

knowledge sharing

cloud ready teams availability

Kubernetes engineering

architectural design.

What Kubernetes business cases can you recommend?

We here at Lifetime Group have years of experience with Kubernetes and cloud computing.

Here are a few technical alternatives for building on containers with K8s.

Development with k8s

1. On the development side, we can use Google Kubernetes Engine (GKE). It is great for Android mobile app projects. Google originally created Kubernetes, which is now advanced by the cloud-native community under the CNCF.

2. On the development side, we can build with AWS Amplify and Amazon Cognito alongside Amazon EKS, the fully managed container orchestration service from AWS.

The AWS Toolkit for Azure DevOps is an extension for hosted and on-premises Microsoft Azure DevOps that makes it easy to manage and deploy applications using AWS CloudFormation, AWS CodeDeploy, and AWS Elastic Beanstalk, and to build pipelines using Microsoft Azure DevOps. You can also use AWS Tools for PowerShell in your pipeline.

3. As an alternative, we could use Docker containers with Amazon ECR as the container registry.

4. Microsoft Azure offers Azure Kubernetes Service (AKS), its managed Kubernetes service.

5. If the enterprise is managing a fleet of vessels carrying hundreds of containers, it needs SysOps Kubernetes engineering and a fully managed container solution.

Simplify container lifecycle management

Build, store, secure, scan, replicate, and manage container images and artifacts with a fully managed, geo-replicated instance of OCI distribution. Connect across environments, including Azure Kubernetes Service and Azure Red Hat OpenShift, and across Azure services like App Service, Machine Learning, and Batch.

The Red Hat OpenShift registry offers a place to manage hundreds of K8s container images and their DevOps environments.

Cybersecurity on Information Technology (IT) and on Operational Technology (OT)

Cybersecurity is no longer simply a technical issue; it is a core survival, resilience, and business issue for both information technology (IT) and operational technology (OT) systems.

OpenStack Engineering

Both Red Hat OpenStack Platform and Canonical’s Charmed OpenStack are production-grade commercially supported OpenStack distributions that differ substantially. Those differences result from distinct missions and business models of the companies behind them. While Red Hat provides an OpenStack distribution for enterprises, Canonical’s mission is to deliver an OpenStack distribution that is deployable, maintainable and upgradable economically. (source: Canonical white paper, 2020).

OpenStack (source: openstack.org)

Managing multi-cloud complexity with OpenStack

Containers at the Edge

The more organizations can address security risks and challenges in a quantitative manner, the more they will be able to involve a broader set of key stakeholders in reducing cybersecurity risks (source: IBM Cybersecurity). Topics include:

Risk Quantification
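One widely used textbook way to quantify a risk is annualized loss expectancy: single loss expectancy SLE = AV × EF (asset value times the share of it lost per incident), and ALE = SLE × ARO (annual rate of occurrence). This is a generic method we use for illustration, not necessarily the approach in the IBM source, and every figure below is hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Risk:
    name: str
    asset_value: float       # AV, e.g. in euros
    exposure_factor: float   # EF: share of AV lost in one incident (0..1)
    annual_rate: float       # ARO: expected incidents per year

    @property
    def single_loss(self) -> float:
        """SLE = AV x EF"""
        return self.asset_value * self.exposure_factor

    @property
    def annual_loss(self) -> float:
        """ALE = SLE x ARO"""
        return self.single_loss * self.annual_rate


def rank_by_annual_loss(risks: List[Risk]) -> List[Risk]:
    """Highest expected yearly loss first, to prioritise the mitigation budget."""
    return sorted(risks, key=lambda r: r.annual_loss, reverse=True)


# Hypothetical risk register mixing an OT and an IT scenario.
register = [
    Risk("OT ransomware outage", 400_000, 0.25, 0.5),
    Risk("Phishing account takeover", 20_000, 0.5, 4),
]
for risk in rank_by_annual_loss(register):
    print(f"{risk.name}: SLE {risk.single_loss:,.0f}, ALE {risk.annual_loss:,.0f}")
# OT ransomware outage: SLE 100,000, ALE 50,000
# Phishing account takeover: SLE 10,000, ALE 40,000
```

Expressing both IT and OT risks in the same yearly-loss unit is what lets business stakeholders compare them and decide where mitigation money goes first.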

The three major trends influencing cybersecurity:

Risks and opportunities in increasing digitalisation and connectivity;

The convergence and virtualization/containerization of information technology (IT) and operational technology (OT) systems;

The role of teams in managing SecOps and systems.

Zero Trust strategy

A successful enterprise digital transformation with a zero trust strategy includes policies on how the enterprise handles its most sensitive data.
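Such policies are often expressed as "never trust, always verify": every request is evaluated on its own, and anything not explicitly allowed is denied. The Python sketch below is a hypothetical, simplified policy check; the sensitivity levels, field names, and rules are our own illustration, not a specific product's policy model.

```python
from dataclasses import dataclass
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical data classification levels, lowest to highest."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


@dataclass(frozen=True)
class AccessRequest:
    user: str                  # authenticated identity ("" if unknown)
    mfa_verified: bool         # strong authentication for this session
    device_compliant: bool     # device posture check passed
    clearance: Sensitivity     # highest level the user's role may access
    resource: Sensitivity      # classification of the requested data


def is_allowed(req: AccessRequest) -> bool:
    """Evaluate every request explicitly; anything not allowed is denied."""
    if not req.user:
        return False  # no anonymous access, even from the internal network
    if not (req.mfa_verified and req.device_compliant):
        return False  # verify identity and device on every request
    return req.clearance >= req.resource  # least privilege by data classification


print(is_allowed(AccessRequest("ana", True, True, Sensitivity.CONFIDENTIAL, Sensitivity.INTERNAL)))  # True
print(is_allowed(AccessRequest("ana", True, False, Sensitivity.RESTRICTED, Sensitivity.PUBLIC)))    # False
```

Note that network location plays no part in the decision: a compliant device with a verified user is required even for public data.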

Cloud Security

Edge computing and AI & deep learning

Artificial intelligence and deep learning applications are gaining momentum among businesses looking for intelligent and efficient ways to run their workloads and applications at the edge.

Many businesses are beginning to look to edge and IoT solutions that use lightweight (micro) Kubernetes distributions to run applications close to the end devices and systems. New technology-based solutions at the edge require high performance, flexibility, and scalability.

GitHub and GitOps offer a platform and a community for DevOps projects and for integrating microservices, serverless functions, and multi-cloud containers.
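At the heart of GitOps is a reconciliation loop: the desired state stored in Git is compared with what is actually running, and the differences become actions. The Python sketch below shows only that diffing step; the resource keys and the dictionary representation of manifests are simplifying assumptions, and real controllers such as Flux or Argo CD do considerably more (and usually make pruning configurable).

```python
from typing import Dict, List, Tuple

Manifest = Dict[str, object]


def plan_reconcile(desired: Dict[str, Manifest],
                   live: Dict[str, Manifest]) -> List[Tuple[str, str]]:
    """Diff desired state (from Git) against live cluster state.

    Keys identify resources, e.g. "Deployment/default/web" (hypothetical format).
    Returns (action, key) pairs where action is create, update or delete.
    """
    actions: List[Tuple[str, str]] = []
    for key in sorted(desired):
        if key not in live:
            actions.append(("create", key))
        elif desired[key] != live[key]:
            actions.append(("update", key))  # drift or a new commit in Git
    for key in sorted(live):
        if key not in desired:
            # Git is the source of truth: resources not in the repository are pruned.
            actions.append(("delete", key))
    return actions


desired = {"Deployment/default/web": {"replicas": 3}, "Service/default/web": {"port": 80}}
live = {"Deployment/default/web": {"replicas": 2}, "ConfigMap/default/old": {}}
print(plan_reconcile(desired, live))
```

Running the same diff repeatedly is what makes the cluster converge on whatever was last merged to the repository, which is also why a Git revert doubles as a rollback.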

Open source: developers empower developers. Lifetime Group's policy is to support open source 100%.

Kubernetes solution types

Resources

ISA/IEC 62443 standard

provides a flexible framework to address and mitigate current and future security vulnerabilities in industrial automation and control systems (IACSs).

ISO/IEC 27001 INFORMATION SECURITY MANAGEMENT

provides requirements for an information security management system (ISMS), though there are more than a dozen standards in the ISO/IEC 27000 family. Following them enables organizations of any kind to manage the security of assets such as financial information, intellectual property, employee information, and any information entrusted by third parties.

#aws #azure #devops #kubernetes #microsoft #docker #cloud #github #gke #istio #prometheus

Enterprise developers face several challenges when it comes to building serverless applications, such as integrating applications and building container images from source. With more than 60 practical recipes, this cookbook helps you solve these issues with Knative, the first serverless platform natively designed for Kubernetes.

Efficiently build, deploy, and manage modern serverless workloads

Apply Knative in real enterprise scenarios, including advanced eventing

Monitor your Knative serverless applications effectively

Integrate Knative with CI/CD principles, such as using pipelines for faster, more successful production deployments

Deploy a rich ecosystem of enterprise integration patterns and connectors in Apache Camel K as Kubernetes and Knative components.
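Knative's default autoscaler (the Knative Pod Autoscaler) scales a revision on request concurrency and can scale it to zero when there is no traffic. The Python function below is a deliberately simplified model of that decision: the real autoscaler averages metrics over time windows and has a panic mode, and the defaults of 100 concurrent requests per pod at 70% utilization are assumed to mirror Knative's documented defaults, so check your cluster's autoscaler configuration.

```python
import math


def desired_pods(observed_concurrency: float,
                 target_per_pod: float = 100.0,
                 target_percentage: float = 70.0,
                 min_scale: int = 0,
                 max_scale: int = 0) -> int:
    """Simplified concurrency-based scaling decision for one revision.

    observed_concurrency: average in-flight requests across the revision
    min_scale: 0 allows scale to zero when there is no traffic
    max_scale: 0 means "no upper bound"
    """
    if target_per_pod <= 0 or not 0 < target_percentage <= 100:
        raise ValueError("invalid scaling target")
    effective_target = target_per_pod * target_percentage / 100  # 70 with the defaults
    pods = math.ceil(observed_concurrency / effective_target)
    pods = max(pods, min_scale)
    if max_scale > 0:
        pods = min(pods, max_scale)
    return pods


print(desired_pods(0))    # 0: no traffic, scaled to zero
print(desired_pods(141))  # 3: 141 / 70 rounded up
```

Targeting 70% rather than 100% of a pod's capacity leaves headroom for traffic that arrives while new pods are still starting.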

2020 State of the API Report

Want to learn more? Join our Fireside Chat

Dates:

2.3.2021

9.3.2021

The Cloud Native Computing Foundation’s flagship conference gathers adopters and technologists from leading open source and cloud native communities in Los Angeles from October 12 – 15, 2021. Join Kubernetes, Prometheus, Envoy, CoreDNS, containerd, Fluentd, TUF, Jaeger, Vitess, OpenTracing, gRPC, CNI, Notary, NATS, Linkerd, Helm, Rook, Harbor, etcd, Open Policy Agent, CRI-O, TiKV, CloudEvents and Falco as the community gathers for four days to further the education and advancement of cloud native computing.

Microservices, Continuous Delivery, DevOps and Containers

Operational excellence

Security

Reliability

Performance

Cost optimization

Lifetime Consulting offers cloud architecture advisory services and consultants. Call Risto Anton at +358400319010 for further information.

Business Cases

High Performance Combination: Amazon EKS, Bottlerocket, and Calico eBPF

Bottlerocket is an open-source Linux distribution built by Amazon to run containers securely at scale. It is tailored to improve stability and performance, with a strong focus on security. Because it is built from the ground up on the Linux 5.4 kernel, Bottlerocket is a great platform for running Calico eBPF.

Calico’s eBPF dataplane offers performance and capability improvements over the standard Linux networking dataplane, such as higher throughput, lower resource usage, and native Kubernetes Service handling (replacing kube-proxy) with advanced features such as source IP preservation and direct server return (DSR).

Code-first environments in containers

It is one thing to learn to dance 💃🏿 🕺🏼 and a totally new game to build a dance school with successful dance classes, various dance programs, and certificates.

CI/CD in DevOps

Using containers brings flexibility, consistency, and portability to a DevOps culture. One of the essential parts of DevOps is creating continuous integration and continuous deployment (CI/CD) pipelines that deliver containerized application code to production faster and more reliably. Enabling canary or blue-green deployments in CI/CD pipelines allows new application versions to be tested more robustly in production, with a safe rollback strategy. A service mesh helps with canary deployments in production: it controls and distributes user traffic between versions of the application by variable percentages, gradually increasing the share sent to the new version. If anything goes wrong while the percentage is being increased, the rollout is aborted and traffic rolls back to the previous version.
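The canary logic described above can be sketched as a small control loop. In this Python sketch, `set_weight` and `is_healthy` are hypothetical hooks: in a real setup `set_weight` would update the service mesh route (for example the weighted targets of an AWS App Mesh route) and `is_healthy` would check error rates or latency after each step. The step percentages are illustrative.

```python
from typing import Callable, Iterable


def progressive_canary(steps: Iterable[int],
                       is_healthy: Callable[[int], bool],
                       set_weight: Callable[[int], None]) -> bool:
    """Shift user traffic to the new version step by step.

    steps: canary traffic percentages, e.g. [10, 25, 50, 100];
           the stable version receives the remaining 100 - weight percent.
    is_healthy: hypothetical health check, called after each shift.
    set_weight: hypothetical hook that applies the weight to the mesh route.
    Returns True if the new version was promoted, False if rolled back.
    """
    for weight in steps:
        if not 0 <= weight <= 100:
            raise ValueError(f"invalid weight {weight}")
        set_weight(weight)
        if not is_healthy(weight):
            set_weight(0)  # abort: send all traffic back to the stable version
            return False
    return True


applied = []
promoted = progressive_canary([10, 50, 100], lambda w: w < 50, applied.append)
print(promoted, applied)  # False [10, 50, 0]
```

Keeping the traffic steps and health check outside the loop means the same rollout logic can be driven from any CI/CD tool that can call the mesh API.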

GitLab, which provides version control, CI/CD pipelines, and DevOps best practices, is used by many AWS customers in their daily software development cycles. GitLab makes it easier to visualize and track CI/CD pipelines when a team deploys containerized applications using canary deployments and service meshes. Existing GitLab users can also modify their CI/CD pipelines to add a service mesh without changing their CI/CD tool. Read the tutorial "CI/CD with Amazon EKS using AWS App Mesh and Gitlab CI".

GitLab Ultimate for Cloud Paks

AWS Services that we use for clients

What is Amazon Redshift?

Amazon Redshift is the most widely used cloud data warehouse. It makes it fast, simple and cost-effective to analyze all your data using standard SQL and your existing Business Intelligence (BI) tools. It allows you to run complex analytic queries against terabytes to petabytes of structured and semi-structured data, using sophisticated query optimization, columnar storage on high-performance storage, and massively parallel query execution. Most results come back in seconds. With Redshift, you can start small for just $0.25 per hour with no commitments and scale out to petabytes of data for $1,000 per terabyte per year, less than a tenth of the cost of traditional on-premises solutions.

Amazon Redshift also includes Amazon Redshift Spectrum, allowing you to run SQL queries directly against exabytes of unstructured data in Amazon S3 data lakes. No loading or transformation is required, and you can use open data formats, including Avro, CSV, Grok, Amazon Ion, JSON, ORC, Parquet, RCFile, RegexSerDe, Sequence, Text, Hudi, Delta and TSV. Redshift Spectrum automatically scales query compute capacity based on the data retrieved, so queries against Amazon S3 run fast, regardless of data set size.
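To put the quoted prices into perspective, here is some simple arithmetic in Python. It takes the $0.25 per hour and $1,000 per terabyte per year figures above at face value (list prices change, so check current AWS pricing), and the office-hours scenario assumes compute is not billed while the cluster is paused.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; leap years ignored


def compute_cost_usd(hourly_rate: float, hours: float) -> float:
    """On-demand cost: hourly rate x hours actually running."""
    return hourly_rate * hours


def at_scale_cost_usd(terabytes: float, usd_per_tb_year: float = 1000.0) -> float:
    """Yearly cost at the quoted price per terabyte per year."""
    return terabytes * usd_per_tb_year


always_on = compute_cost_usd(0.25, HOURS_PER_YEAR)    # smallest start, running all year
office_hours = compute_cost_usd(0.25, 10 * 22 * 12)  # 10 h x 22 days x 12 months
ten_tb = at_scale_cost_usd(10)                        # a 10 TB warehouse

print(always_on, office_hours, ten_tb)  # 2190.0 660.0 10000.0
```

The gap between the always-on and office-hours figures is why we look at actual usage patterns before sizing a data warehouse for a client.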

What is Amazon EMR?

Amazon EMR is a web service that enables businesses, researchers, data analysts, and developers to easily and cost-effectively process vast amounts of data. It utilizes a hosted Hadoop framework running on the web-scale infrastructure of Amazon Elastic Compute Cloud (Amazon EC2) and Amazon Simple Storage Service (Amazon S3).

What can I do with Amazon EMR?

Using Amazon EMR, you can instantly provision as much or as little capacity as you like to perform data-intensive tasks for applications such as web indexing, data mining, log file analysis, machine learning, financial analysis, scientific simulation, and bioinformatics research. Amazon EMR lets you focus on crunching or analyzing your data without having to worry about time-consuming set-up, management or tuning of clusters running open source big data applications or the compute capacity upon which they sit.

Amazon EMR is ideal for problems that necessitate the fast and efficient processing of large amounts of data. The web service interfaces allow you to build processing workflows, and programmatically monitor progress of running clusters. In addition, you can use the simple web interface of the AWS Management Console to launch your clusters and monitor processing-intensive computation on clusters of Amazon EC2 instances.
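Programmatic monitoring usually means polling the cluster state through the EMR API. The Python sketch below polls until the cluster reaches a wanted state such as `WAITING` (idle and ready for steps) or ends up terminated. The polling loop is our own illustration; `describe_cluster` is the boto3 EMR client call, the cluster ID and region in the usage line are placeholders, and boto3 also ships ready-made waiters (such as `cluster_running`) that may be simpler in practice.

```python
import time
from typing import Callable, Set

# Terminal states of an EMR cluster as reported by DescribeCluster.
TERMINAL_STATES = {"TERMINATED", "TERMINATED_WITH_ERRORS"}


def wait_for_cluster(get_state: Callable[[], str],
                     targets: Set[str],
                     poll_seconds: float = 30.0,
                     timeout_seconds: float = 3600.0,
                     sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll get_state() until the cluster reaches a target or terminal state."""
    waited = 0.0
    while True:
        state = get_state()
        if state in targets or state in TERMINAL_STATES:
            return state
        if waited >= timeout_seconds:
            raise TimeoutError(f"cluster still {state} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds


def emr_state_getter(cluster_id: str, region: str) -> Callable[[], str]:
    """Build a get_state() callable backed by boto3 (needs AWS credentials)."""
    import boto3  # imported lazily so the polling logic can be tested offline

    emr = boto3.client("emr", region_name=region)
    return lambda: emr.describe_cluster(ClusterId=cluster_id)["Cluster"]["Status"]["State"]


# Usage (placeholder cluster ID and region):
# state = wait_for_cluster(emr_state_getter("j-XXXXXXXXXXXXX", "eu-north-1"), {"WAITING"})
```

Passing `get_state` and `sleep` in as functions keeps the loop independent of AWS, so it can be tested without a live cluster.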

What can I do with Amazon EMR that I could not do before?

Amazon EMR significantly reduces the complexity of the time-consuming set-up, management and tuning of Hadoop clusters or the compute capacity upon which they sit. You can instantly spin up large Hadoop clusters which will start processing within minutes, not hours or days. When your cluster finishes its processing, unless you specify otherwise, it will be automatically terminated so you are not paying for resources you no longer need.
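The automatic termination mentioned above is controlled when the cluster is launched. The Python sketch below builds a request for the boto3 EMR `run_job_flow` call for a transient cluster that runs one Spark step and then shuts down, because `KeepJobFlowAliveWhenNoSteps` is set to `False`. Cluster and step names, S3 paths, instance types, and the release label are hypothetical placeholders, and the default IAM role names assume the standard EMR default roles already exist in the account.

```python
def build_transient_cluster_request(name: str, log_uri: str, script_s3_uri: str,
                                    release_label: str = "emr-6.15.0") -> dict:
    """Keyword arguments for boto3 EMR run_job_flow (placeholder values)."""
    return {
        "Name": name,
        "ReleaseLabel": release_label,  # assumed; pick a current EMR release
        "LogUri": log_uri,
        "Applications": [{"Name": "Spark"}],
        "Instances": {
            "InstanceGroups": [
                {"Name": "primary", "InstanceRole": "MASTER",
                 "InstanceType": "m5.xlarge", "InstanceCount": 1},
                {"Name": "core", "InstanceRole": "CORE",
                 "InstanceType": "m5.xlarge", "InstanceCount": 2},
            ],
            # Terminate automatically once all steps have finished.
            "KeepJobFlowAliveWhenNoSteps": False,
        },
        "Steps": [{
            "Name": "spark-job",
            "ActionOnFailure": "TERMINATE_CLUSTER",  # also shut down if the step fails
            "HadoopJarStep": {"Jar": "command-runner.jar",
                              "Args": ["spark-submit", script_s3_uri]},
        }],
        "JobFlowRole": "EMR_EC2_DefaultRole",
        "ServiceRole": "EMR_DefaultRole",
    }


def launch(request: dict, region: str) -> str:
    """Start the cluster and return its ID (needs AWS credentials)."""
    import boto3  # imported lazily so the request builder can be tested offline

    emr = boto3.client("emr", region_name=region)
    return emr.run_job_flow(**request)["JobFlowId"]


# Usage (hypothetical bucket and script):
# cluster_id = launch(build_transient_cluster_request(
#     "nightly-report", "s3://my-bucket/logs/", "s3://my-bucket/jobs/report.py"), "eu-north-1")
```

Because the cluster exists only while the step runs, you pay for compute only during processing.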

Using this service you can quickly perform data-intensive tasks for applications such as web indexing, data mining, log file analysis, machine learning, financial analysis, scientific simulation, and bioinformatics research.

As a software developer, you can also develop and run your own more sophisticated applications, allowing you to add functionality such as scheduling, workflows, monitoring, or other features.